Image Credit: Dennis Schroeder / National Renewable Energy Laboratory

In the past year, a growing number of papers from economists have questioned the effectiveness of energy-efficiency programs and policies. We have reviewed many of these studies and blogged about several of them (see here, here, here, and here).

In general, we have found that some of these studies offer useful lessons, but too often they miss the mark because they overlook key issues in the programs they are evaluating, or they overgeneralize their findings to programs very different from the ones they evaluated. But rather than continuing a tit-for-tat debate, I want to go past some of these details and look more broadly at how economists and energy-efficiency practitioners can avoid these past problems, better understand each other, and work together more effectively.

There is much to learn

First, we admit that not all energy-efficiency programs are stellar. It’s critical to have good evaluation to help tell what is working well and what needs improving.

For example, one of the useful findings from the recent but controversial Fowlie et al. evaluation of the low-income weatherization program in several Michigan communities is that the energy audits in this program were overestimating the energy savings that can be achieved. Fortunately, as my colleague Jennifer Amann recently wrote, other research has found that calibrating audits to actual energy bills can do much to address this problem. This is an example of how identifying a problem can help lead to solutions.

Getting beyond paradigms to discover the truth

Second, there is a tendency, in both the economics and energy-efficiency communities, to work from established paradigms and work with colleagues who share similar views. When the two communities meet they often talk past each other.

There is a need for both sides to better understand where the other side is coming from, and to explore opportunities to find a middle ground. For example, many economists look for rigorous evaluation, preferring what they call the “gold standard”: randomized control trials in which a large group of potential participants is randomly assigned to either a treatment group or a control group.

But randomized control trials can be very difficult to implement, as the recent recipient of the Nobel Prize in Economics has discussed. This is particularly a problem for full-scale programs in which everyone is eligible and random assignment to a control group is not possible. On the other hand, the energy-efficiency community in recent years has increased its use of “deemed savings estimates,” since these are easier to use and provide certainty for program implementers.

Deemed savings estimates are supposed to be based on prior evaluations, but these evaluations are not always as rigorous or frequent as would be ideal. Perhaps the two sides could agree on more frequent “quasi-experimental” studies that carefully select a control group that is not randomized.
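To make the quasi-experimental idea concrete, here is a minimal sketch of one common approach, a difference-in-differences comparison. The post does not name this method, so treat it as an assumption about what such a study might look like. The function name and all of the meter readings are hypothetical and chosen only for illustration.

```python
from statistics import mean


def difference_in_differences(treated_pre, treated_post, control_pre, control_post):
    """Estimate savings as the participants' change in energy use
    minus the change seen in a non-randomized comparison group."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    # A positive result means participants cut their use by more than
    # the comparison group did over the same period.
    return control_change - treated_change


# Hypothetical annual electricity use in kWh (illustrative numbers, not real data).
treated_pre = [10000, 12000, 11000]    # participants, year before the retrofit
treated_post = [8500, 10500, 9500]     # participants, year after the retrofit
control_pre = [10500, 11500, 11000]    # comparison homes, same "before" year
control_post = [10000, 11000, 10500]   # comparison homes, same "after" year

savings = difference_in_differences(
    treated_pre, treated_post, control_pre, control_post
)
print(savings)
```

Subtracting the comparison group's change is what removes influences such as weather or price changes that affected both groups. The estimate is only as good as the match between the two groups, which is why careful selection of the comparison group matters.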

The need to be objective

Third, both communities need to be fair and objective when they conduct studies, and not seek to bias the results or report valid results in a biased manner.

Study designs that implicitly tilt the playing field in one direction are more rhetoric than useful investigation of what is happening. Examples of tilting the field include studies that look at only costs but not benefits (see here for an example); include extra costs unrelated to energy efficiency (e.g. home repair costs); leave important costs out, such as changes in maintenance; or are based on a simple cost-benefit framework without considering other goals that the programs might have.

Likewise, each program is different and one problematic program should not cast doubt on all of the others, particularly dissimilar programs. Conclusions can only be generalized to similar programs.

Combining skills to create the best research possible

So how can we better work together? First, rather than each community conducting separate studies, perhaps economists and energy-efficiency practitioners can collaborate on some studies, as each profession brings useful skills, perspectives, and information.

Economists tend to be good at research methods and statistics but they don’t always understand the markets they are evaluating. By coupling economists with knowledgeable practitioners, many of these problems can be avoided. Likewise, it would be useful to have the other community review studies before they are published, allowing problems to be identified and corrected before publication. Similarly, the two communities can work together to identify good programs that are worth studying, rather than marginal programs that are not typical.

Finally, when results are obtained, it can be useful to look not only at the results but also at why they happened. In this way, studies can achieve what perhaps we can all agree is the intended purpose: to understand what works, and to improve what falls short.

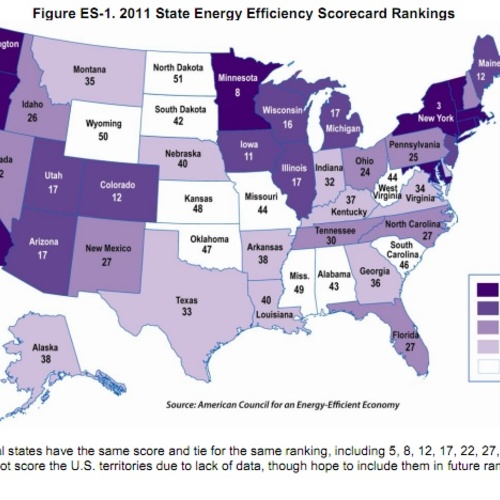

Steven Nadel is the executive director of the American Council for an Energy-Efficient Economy. This post originally appeared on the ACEEE Blog.

4 Comments

This blog is the same as its subject!

Slanted. Too funny.

Good Faith?

The author seems to assume that agendas are not at play here. The goal is not always fact seeking; sometimes it is just expressing an opinion which fits the narrative of your organization.

energy savings

Using pre- and post-construction meter data, an important result of the “controversial Fowlie et al. evaluation” (http://e2e.haas.berkeley.edu/featured-eeinvestments.html) is that actual savings were only 39% of NEAT-projected savings. The author suggests that the E2e study was flawed because they didn’t calibrate “audits to actual energy bills.” But they didn’t need to “calibrate” to energy bills: they went a step further and actually used the energy bills.

An important message from the E2e study is that we need more than modeled projections: we need post-retrofit consumption data. And we need to look more closely at the mechanism that supports a projection of energy saved by installing a product, without any way to measure actual use.

deep thinking? But. ..

Nature.... population. How possibly can yaa all be so far from the mark?

Put whatever in your equation and whatever is the result...

You're wasting your time down the wrong rabbit hole kids.

Nature... the nature of.... and what is... and will be... is... what is.
