How to handle excess heat from electronics properly

I’m not sure if this is the right category for this and it’s probably going to be a bit long winded, but I want to give as much detail as I can:

I recently bought my first house. Being an IT nerd myself, I like to run a fair bit of electronics at home. My home network is more akin to a small business. With energy costs being what they are right now, I’ve become more conscientious when it comes to power, and reduced or upgraded to more power efficient hardware wherever possible, but with electronics inevitably comes heat.

I’ve made my office in one of the spare bedrooms. All of the network and small server equipment is in a closet in that room at the moment, and the room typically sits about 10 degrees hotter than the rest of the house, if not more. At the moment it is 79 degrees Fahrenheit in the office and 68 on the first floor of the house at the thermostat. This is, as you might assume, a bit of a problem since 80 isn’t exactly comfortable and I spend about 75% of my waking hours in this office.

The house is brand new. Just completed in May 2022, just North East of Denver, CO. Right now I have an Ecobee Smart Thermostat (newest model), which is installed in the kitchen on the first floor, and one of the smart sensors it came with in my office. The sensor relays temp back to the thermostat which then tries to cool down the office, at the expense of making the first floor so cold that I could have penguins as pets.

The room this closet is attached to does not have a return vent at the moment. Just a vent above the door to the hallway, where the return is located for this end of the house. My thought is to add a 12″ return vent in the closet where the equipment is and using an inline fan like the AC Infinity Cloudline to draw the air out and feed it back into the system but I’m wondering if that would be harmful, beneficial, or not do anything at all.

tl;dr: I have a computer addiction, and they make too much heat. What can I do with the heat?

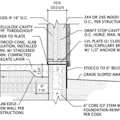


Replies

The ideal advice is to redirect your electronics hobby towards an obsession with minimizing electricity consumption. If a laptop can run for 8 hours on a small battery, a desktop that uses hundreds of watts is an embarrassment, and an obsession with minimizing power can be a rewarding focus.

But given that you've already done some of that, here's some other advice, while we wait for Bill to come along, because he's actually a professional in this field.

First, consider using outside air to cool the electronics. They are probably happy at 90° F even when you aren't. So it may be possible to simply isolate them and circulate outside air through the electronics cabinet, with some filtering on the intake.

But your idea of having a return vent from the closet is in fact a good one. Your air conditioner will be (perhaps counterintuitively) more efficient working from higher temperature return air, and you will also keep more of that heat out of the office, and keep the server closet cooler.

Another consideration is what your ventilation system is for the house. If you need some mechanical ventilation and you don't have that yet, an option is to implement an exhaust-only ventilation system, exhausting the air from the closet.

My understanding of your climate is that you have cool nights. There might be some potential to add a lot of thermal mass to the closet, and do outside air cooling at night and then rely on that thermal mass to absorb the heat generated during the day.

If you measure the power consumption we can be more specific about how much heat is generated and things like air flow rates or amount of thermal mass to address that.
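To give a feel for the thermal mass idea, here is a minimal sketch. It assumes water as the storage medium, that all of the equipment heat ends up in the mass, and a hypothetical 500 W load held for 12 hours with a 10 °F swing; none of those numbers come from measurements, and the function name is made up for illustration.

```python
# Rough sizing of thermal mass for night-flush cooling.
# Assumptions (illustrative only): water storage, perfect heat transfer into the mass.

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0
KG_PER_US_GALLON_WATER = 3.785


def thermal_mass_kg(load_watts, hours, swing_deg_f,
                    specific_heat=WATER_SPECIFIC_HEAT_J_PER_KG_K):
    """Mass needed to absorb load_watts for `hours` while warming by swing_deg_f."""
    energy_joules = load_watts * hours * 3600.0
    swing_kelvin = swing_deg_f * 5.0 / 9.0  # a temperature difference, so no offset
    return energy_joules / (specific_heat * swing_kelvin)


if __name__ == "__main__":
    mass = thermal_mass_kg(load_watts=500, hours=12, swing_deg_f=10)
    print(f"{mass:.0f} kg of water, about {mass / KG_PER_US_GALLON_WATER:.0f} gallons")
```

Under those assumptions the answer lands on the order of 900 kg of water, which suggests thermal mass alone is a big ask for a closet and is better treated as a supplement to airflow.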

You need more cooling in your equipment room. You're probably not going to be able to move enough air to cool enough without reworking your whole system.

I've used portable air conditioners in similar situations in offices. Something like this: https://www.delonghi.com/en-us/products/air-comfort/pinguino-portable-air-conditioners

They exhaust heat with a flexible hose. If you can route the hose into a hallway -- ideally close to a return -- then it just adds to the AC load.

Yep, this is my field -- I bill lots and lots (and lots!) of hours to customers to keep their equipment cool, usually with clever methods that also minimize energy consumption.

Charlie is right -- one of the ways to maximize cooling efficiency is to avoid the mixing of fresh, cool, "conditioned" air with hot equipment exhaust air as much as possible. In the world of datacenters, we do this by walling off either the hot or cold aisles in facilities, usually the cold aisles, in which case we call it "cold aisle containment". You can also do "hot aisle containment", and sometimes even both. I've often found hot aisle containment to actually work better, but a lot of the time it comes down to the layout of the room.

A spot cooler such as DC suggested should be your last resort. Those are energy hogs, and they are another maintenance item and also make more noise. I'm not a fan of those things, and I view them as emergency cooling units. For smaller equipment rooms, I like to put a minisplit on the wall. Minisplits are readily available up to around 3 tons, which are good for around 9-10kW or so of equipment in practice, but if you only have one unit I'd try for about half that much.

The easiest option for you is probably to mount an air return as you planned, high up in the closet, to exhaust the hot air. Make sure there are louvers on the lower part of the closet doors (assuming you keep the doors closed most of the time), since you want "cool air in at the bottom, hot air out at the top". This way the cooling works WITH convection instead of against it. This is somewhat similar to how the raised floor cooling systems work in large datacenter facilities. If you have only 1-2 kW worth of heat to get rid of, chances are good this return will be all you need if you also keep the closet doors closed most of the time, or if you can find another way to contain the heat so that it doesn't come back and mix into your office space. Remember containment is your friend here -- keeping the hot exhaust air hot will improve efficiency, so you don't want it to mix with cool air.

If you have a basement, try putting your server stuff down there, and then use remote console software for management. This way the heat -- and the noise -- is out of your office. In my own home, I have my network stuff, and my central UPS, in the basement. I have only my normal office computer and printer in my office. This is great for keeping noise down, and also keeps some of the heat out of my office too. You could potentially ventilate with outside air, I know you get cool nights as I have family in Aurora, but you can have hot days so you don't want to do it all the time. You also lose control of humidity when you use outside ventilation, which in your climate means very dry air, and that means STATIC ELECTRICITY, which WILL reduce the lifespan of your equipment. We try to maintain our telecom facilities at around 40% RH to keep static under control for this reason. You also would be amazed how much dust and grit will be drawn in from the outdoors if you ventilate with outdoor air. You need a pleated AND BAG filter to deal with it.

Another option is to colocate some of your server equipment into a datacenter. Some datacenters cater to smaller customers, even single servers. I actually used to run such a facility, with several thousand servers, but now focus more on the enterprise space (if you need a few hundred square feet or more, my new company has a 76,000 square foot Tier III facility that is nearly empty and we'd love some new tenants ;-). Datacenters will tend to have vastly better network connectivity than you will have at home, and usually have good power backup (UPS and generator) systems too. Since large datacenters pay industrial electric rates, their power costs are lower than residential users. What this means -- and I have seen this happen for my customers -- is that you can often move equipment from your home to a commercial facility that is built for this purpose and end up SAVING MONEY over hosting things in your house due to the savings on electricity alone. That might be something to think about depending on what your system requirements are.

BTW, I have designed facilities for customers before that are cooled exclusively with outdoor air (no air conditioners). This is a big cost savings since about 40% of the power consumed by datacenter and telecom facilities is used to run the cooling systems. The downside is that your equipment will run hotter in the summer, and for most equipment this is a problem, and you have more issues with dirt and humidity control. All the facilities I've designed for outdoor air use have been cryptocurrency mining facilities. Their equipment is able to run hotter safely compared to normal computers, and energy costs are far and away their biggest concern, so EVERYTHING else is secondary -- even backup power. They have to duct all the airflow though, with hot AND cold aisle containment, and duct fan systems. They are interesting facilities, with crazy high power densities (sometimes up in the kilowatt per square foot of floor space range), but they aren't really suited to regular computer systems or network equipment. All the normal facilities use various cooling systems, with regular "direct expansion" (refrigerant-based) cooling systems in smaller facilities, glycol systems in medium facilities (which can have an "econo" option that can cool without the compressor if the outdoor air is cool enough), and chilled water systems for the largest facilities. I like the chilled water systems, they have some big advantages when paired with evaporative cooling towers or cooling ponds, but they aren't practical to scale down to small systems.

Bill

Let's run some back-of-the-envelope numbers. Let's assume the load is 1,000 Watts, or about 3,000 BTU/hr or a quarter ton.

Right now the office is 11 degrees warmer than the rest of the house, and OP says that's unacceptable. Let's say a 5F delta is acceptable. To do it with return air, you would have to move 3,000 BTU/hr with a 5F delta, that's about 600 CFM. Looking at a handy duct sizing chart that requires a 14" round duct. I will posit that slipping a 14" round duct into most residential construction isn't practical.

It's a little more practical to do it with cooling. Using the rule of thumb that it takes 400 cfm to move a ton of cooling, that means 100 CFM, supply and return. The duct chart tells me that's two 7-inch ducts. Slightly more practical but still not something you can slip in unobtrusively.
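For anyone who wants to redo the unit conversions without rounding, a minimal sketch follows. The conversion constants are standard (3.412 BTU/hr per watt, 12,000 BTU/hr per ton); the 400 CFM per ton figure is the rule of thumb quoted above, and the function name is just for illustration.

```python
# Convert an electrical load into cooling units and rule-of-thumb supply airflow.

BTU_PER_HR_PER_WATT = 3.412
BTU_PER_HR_PER_TON = 12_000
CFM_PER_TON_RULE_OF_THUMB = 400  # rule of thumb from the post, not a design value


def load_summary(watts):
    """Return (BTU/hr, tons of cooling, rule-of-thumb supply CFM) for a load in watts."""
    btu_per_hr = watts * BTU_PER_HR_PER_WATT
    tons = btu_per_hr / BTU_PER_HR_PER_TON
    supply_cfm = tons * CFM_PER_TON_RULE_OF_THUMB
    return btu_per_hr, tons, supply_cfm


if __name__ == "__main__":
    btu, tons, cfm = load_summary(1000)
    print(f"{btu:.0f} BTU/hr, {tons:.2f} tons, {cfm:.0f} CFM")
```

The unrounded figures for 1,000 W come out a little above the round numbers used in the estimate (roughly 3,400 BTU/hr and a bit over 110 CFM), which does not change the conclusion about duct size.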

The portable air conditioner has all of the faults you mentioned. At the same time, it has the advantage that you can wheel it into a room, maybe cut a hole in the drywall for the exhaust, plug it in and it works.

That said, I think the best advice is to get all of that equipment to a datacenter. I consider myself a technologist and the only devices I have in my own home are a laptop and a phone.

Fortunately, we have the opportunity to remove hot air from the closet rather than removing hot air from the room. The closet might be 15 degrees higher than the general building temperature while having the office temperature less than 5 degrees above the general building temperature. So the required air flow might be only 200 CFM rather than 600 CFM.

Typical server exhaust air is 90-100+ °F. The reason hot/cold aisle containment improves efficiency is because it limits mixing. In a datacenter, you walk down the cold aisle and it's ~70 °F or so, and blowing up at you from underneath, like the famous Marilyn Monroe picture over a sidewalk grate. Go through a sliding door into the hot aisle and it's well over 90 °F, with hot air blowing at you from the racks. It's also LOUD LOUD LOUD all the time, everywhere, from all directions. All the air everywhere is moving at pretty high velocity. This is very different from a typical office or residential environment.

Typical thermal delta between hot and cold air can be 20-30+ °F, which allows for a lot more BTU movement per unit airflow. With your other numbers, that means we only need about 93 CFM to move that 1 kW worth of heat. That's the whole point of containment -- exhaust the hot air before it mixes with cool air, so that you can move more heat with less effort. We don't even care what the thermal delta is, there is no "acceptable", since we actually want to keep the hot air as close as possible to the temperature it had coming out of the equipment when we get it to the exhaust space (which is usually the ceiling area in a datacenter). If the techs complain when they're working in the hot aisle, we tell them to wear shorts :-D Creature comforts are secondary to the needs of the equipment in these facilities.

Bill
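The airflow numbers traded in the last few posts can be reproduced with the usual sensible-heat relation, CFM = BTU/hr / (1.08 × ΔT). A minimal sketch, assuming the sea-level standard-air factor of 1.08 (thinner air at altitude lowers it, roughly 0.9 near Denver's elevation as a rough assumption, which raises the required CFM):

```python
# Airflow needed to carry a sensible heat load at a given air temperature rise.

STANDARD_AIR_FACTOR = 1.08  # BTU/hr per CFM per °F, sea-level standard air


def required_cfm(btu_per_hr, delta_t_f, factor=STANDARD_AIR_FACTOR):
    """CFM needed to move btu_per_hr with a delta_t_f rise across the airstream."""
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    return btu_per_hr / (factor * delta_t_f)


if __name__ == "__main__":
    load_btu_per_hr = 3000  # the rounded 1 kW figure used in the thread
    for delta_t in (5, 15, 30):
        cfm = required_cfm(load_btu_per_hr, delta_t)
        print(f"delta T {delta_t:>2} F -> {cfm:.0f} CFM")
```

The 5 °F case is the roughly 600 CFM estimate, 15 °F is the roughly 200 CFM estimate, and 30 °F gives the roughly 93 CFM containment figure.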

Hey Bill,

Thanks for the thorough response! I've worked in datacenters as well, so I'm familiar with the hot/cold aisle concepts. I've spent countless hours at Iron Mountain in Denver, sitting in hot aisles working on servers and networks for maintenances, outages, migrations, etc. I actually considered doing something like this by replacing one of the closet sliding doors with a 19" rack and using the closet as the hot aisle. Technically I could fit a rack there. The closet is 703mm from the back wall to the outside of the door frame. My concern there is the longest piece of equipment (HPE DL380 Gen9) is 679mm long ("deep"?), leaving 24mm of space between the wall and the back of the server. Not enough clearance for the power cable. So I'm thinking sideways inside the closet, since it's 601mm front to back, and like 1.8-2m across. Plenty of space for a rack, plus a little bit of storage space for extra stuff.

I'm currently hunting a 15u-24u enclosed rack that, 1. I can afford, because those suckers are a bit pricey, and 2. will fit in the closet with minimal modifications to the closet (probably have to pull off the baseboards inside). My thought is that if I can get an enclosed rack in there, lift it off the floor a bit with some 2x4's to create a channel for air to be pulled in from the office, and then I could use some insulated flexible duct to connect the top of the rack up to the return in the ceiling (possibly just replace the vent with a collar to attach directly with no gaps). I'm thinking I may make a 3d mockup, but as I'm not an expert on 3d modeling, it'll probably take a bit.

I thought about the colo idea, and might end up going that direction in the future if I can find one that's cheaper than the electric bill for running stuff here, but at the moment most of what I'm running is really for the home automation and needs to be kept onsite, for latency reasons and functionality if/when Comcast goes down. I've built out many offices where they hosted everything in a colo, only to have the IPsec drop mid-day and take out LDAP, print server, file shares, email, etc. For me it wouldn't be quite that dire, I would just lose file shares and wouldn't be able to control the lights, locks, cameras, etc, but right now one of my projects is removing the dependency on internet for some of those things. Might be able to get away with containers for the smaller/more essential stuff at home and move the heavy compute to a colo. Just depends on what I can find for pricing for a colo really.

As far as heat output of the equipment goes, I would estimate under 1 kW. Right now I have an older gaming desktop in the closet that's acting as a server while I work on the build of my new actual server. The PSU in that guy is 800W (unsure of efficiency). A couple components I know off the top of my head: 91W TDP CPU, 180W TDP GPU, 16GB DDR4, 2x 1TB 3.5" spinning disks, a couple SSDs, LED lights (for more horsepower of course). It's a space heater. The new server is dual Xeon E5-2650L v4 (65W TDP), 4x 8TB 3.5" spinning disks, 2x 2.5" SSDs, and 64GB of DDR4, and a single 500W PSU with 92% efficiency at 50% load (guessing around 150W draw). Aside from that I have a single 24-port non-PoE switch (max 56W), Fortigate 60E (max 15W), Arris gateway (I think 24W).
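A quick tally of those figures, as a rough sketch only. The desktop entry is an assumption (CPU plus GPU TDP, ignoring drives, RAM, and PSU losses), the server entry is the guess above, and summing everything treats the old desktop and the new server as running at the same time, which is a worst case.

```python
# Worst-case heat tally from the nameplate/TDP figures quoted in the post (watts).
# These are maximums or guesses, not measured draw.

BTU_PER_HR_PER_WATT = 3.412

nameplate_watts = {
    "old desktop (91 W CPU + 180 W GPU, assumption)": 91 + 180,
    "new server (poster's guess)": 150,
    "24-port switch (max)": 56,
    "Fortigate 60E (max)": 15,
    "Arris gateway (approx.)": 24,
}


def total_heat(loads):
    """Return (total watts, total BTU/hr); nearly all input power ends up as heat."""
    watts = sum(loads.values())
    return watts, watts * BTU_PER_HR_PER_WATT


if __name__ == "__main__":
    watts, btu = total_heat(nameplate_watts)
    print(f"{watts} W, about {btu:.0f} BTU/hr")
```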

Lots to think about in terms of options. For the short term, I'm probably going to have to deal with some heat and use fans to move the air out to the return in the hallway until I can get up in the attic and take a look at what's involved in creating that new return and tying it in. After that it's either the enclosed rack project or colo, whichever ends up being cheaper. With the price of smaller racks being what they are, a colo may be cheaper.

I recommend that you get an inexpensive power meter such as a Kill A Watt and measure the actual power consumption over some hours. The numbers you are listing are the maximum not the actual operating average consumption.
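Turning a plug-in meter's readings into an average is simple arithmetic. A minimal sketch, assuming the meter reports cumulative kWh and elapsed hours; the sample reading is made up.

```python
# Average power from a cumulative energy reading on a plug-in meter.


def average_watts(kwh, hours):
    """Average draw in watts given cumulative kWh over the elapsed hours."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return kwh * 1000.0 / hours


if __name__ == "__main__":
    # Hypothetical reading: 3.6 kWh accumulated over 24 hours.
    print(f"{average_watts(3.6, 24):.0f} W average")
```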

I also suggest that you think critically about what's really needed to run home automation. The latency in a gaming setup needs to be very low, but the latency in home automation can be literally orders of magnitude slower.

I have been meaning to pick up a Kill-A-Watt for a while now, and for a lot of this stuff, that would probably come in really handy. The server I'm planning to run has its own management system built in, called iLO. That system has energy monitoring, including actual idle/peak wattage and I believe BTUs (I know Dell iDRAC shows actual BTU but I'm not sure about HPE iLO). I attached a screenshot of the power management console on my old (and I mean OLD) server that I'm replacing with the newer, much more power efficient one. This thing had dual 875W power supplies with no 80-Plus rating, because that rating/standard didn't exist when it was built.

Since the server is built as a virtualization host, it will be running more than a single server. It's going to host a few gaming servers, Kubernetes nodes, a couple databases, RADIUS, and probably a few other things I haven't thought of at the moment. I did the math on it a while back and it quickly became clear that the cloud equivalent needed to run what I want was too expensive. The equivalent amount of resources in the cloud would cost me almost 10x what the hardware and projected energy usage will over a 5 year period. I've worked in the cloud for years (AWS specifically) and I'm a huge advocate for cloud migration in most cases. For large scale deployments cloud makes way more sense, but for small scale, like my use case with a single hyperconverged server, cloud is actually a lot more expensive.

A lot of the home automation stuff could be hosted out on the cloud for sure, but the potential issue there isn't necessarily latency (except with lighting automation, then latency is more of a concern because of how noticeable the delay can be). The issue is more availability of the services. Keeping everything local means that even when the internet goes down, as it does, you can still control your lights, blinds, locks, robo-dog, whatever else has been connected not only manually, but the automations designed for convenience and/or reducing energy usage continue to function normally.

I'm aware that running this type of hardware at home is a bit unusual to most people, and my girlfriend would agree with you, but it makes me happy to have something to work on. Some people restore old cars, some people do woodworking, I fiddle with servers and coding. A lot of what I do in my lab environments translates into valuable experience for situations that come up in my line of work. It's a big part of how I increased my income almost 5x in 4 years.

Thanks for the reply. I'm not arguing against having hobbies, or against this hobby. I'm actually intending to cheer it on, and to embrace full-on geekiness about it, inclusive of energy optimization.

And I'm not suggesting off-site hosting for home automation. I'm suggesting that it could be handled by a very low power on-site machine, perhaps something like a raspberry pi or even smaller running a very low clock speed.

But then you mention a few gaming servers and some other stuff. I'm not sure how many hours a week that stuff needs to run, but if you can have one system supporting home automation, running 24/7, and something else you fire up as needed, that could give you a lot of savings.

You usually want your cold supply air to go through a perforated front door of the rack, and let the hot exhaust air go out the back of the rack. The old "in the bottom, out the top" with glass fronts and steel backs hasn't been done in a long time. The general trend in datacenters has been towards higher thermal/power density. When I started, typical densities were around 60 watts per square foot or so. Now "regular" is about 135 watts/sqft, and high density is 200+ watts/sqft. I usually design facilities for around 135-160 watts/sqft for most users, with the high density facilities more specialized and therefore more expensive. Our new facility at work has different zones for different densities, starting at around 135w/sqft, with the highest active area around 195w/sqft -- but we have the ability to go even higher on request by beefing up the cooling in some spaces if needed.

Standard rack depth is 40". You want a "square hole" rack, so that you can use the snap-in rails (Dell calls these "rapid rails"). The rapid rails are much easier to work with compared to many of the oddball styles that are out there. You also do NOT want to use the rear cable support arm that is an option with many rack mount servers. The arm looks nice and keeps the cables neat, but it also obstructs the hot air at the back of the equipment which will make the equipment run hotter. Just use some velcro for cable management and don't bother with the arm.

What I would do is get a rack with a perforated front and rear door, then configure the closet so that the hot side of the rack exhausts into the closet and the front of the rack faces the office for cold air intake. You could probably do this by putting the rack in the closet sideways with one of the two bifold doors open and the other closed. Use some cardboard, 1/8" hardboard, or -- my preference -- some 0.06" PETG plastic sheet to "seal" around the rack between the two sides of the closet. PETG is great here, it's a glycol-modified polyester that has most of the durability of polycarbonate at a price closer to acrylic. I punch holes with a hand punch (not a paper punch, a handheld metal punch) at work so that I can hang sheets of the stuff with zip ties onto the wire mesh panels of customer cages to keep hot and cold aisles separated. The material is cheap, easy to work with, and clear (usually, I think you can get it in some colors too).

Put your return vent in the hot side of the closet so that it pulls the hot air out. You might still want a fan on the vent so that it's ALWAYS exhausting air. If you've been in datacenters, I'm sure you're aware that the fans in the CRACs never stop, only the compressors cycle (or three-way valves on chilled water systems modulate) to maintain temperature, but you have full CFM airflow 24x7. You want to emulate that in your home setup so that your equipment sees reasonable constant temperatures, and a simple exhaust fan blowing into the air return is probably enough to accomplish that.

Try DSI Racks in Texas. I used to know one of the owners down there. They make a good product, I've ordered hundreds and hundreds of them for work (for the past 10 years or so we've been buying so much we just order directly from the manufacturer now since we order a container worth at a time). They have a good product at a reasonable price. Note that if you want the combination lock, be sure to specify that you want a real Emka lock and not one of the knockoffs. I've had lots of problems with the knockoff locks messing up the combination and getting stuck, then you have to disassemble them to manually realign the mechanism. The real Emka locks are fine. At your home though, I'd just get the standard (cheaper) key lock and not worry about it. The combination locks are nice in a datacenter where everything has to be keyed differently for different customers, but at home it just costs more and makes things slower when you need to get in.

Charlie is right that your equipment will normally draw something less than maximum depending on what it's doing at any given time. Try to get an "80 Plus" compliant power supply for any computers/servers you have since those are more efficient at partial loads which will save you money, and, since this is GBA after all, more efficient = greener. Losses just mean more heat you have to get rid of anyway, since very nearly 100% of the power in watts that goes into your equipment comes right back out as heat.

BTW, while I'm not really a software guy, I do facility infrastructure and optical network design, I'd look into virtualization if you have several services running for your home. Virtualization lets you run multiple servers on one physical machine, which lets you use one server to a higher average utilization as a percentage of total capacity. Higher utilization means greater overall efficiency, which both saves money and is greener. There has been a HUGE move towards virtualization over the past decade or so, and it has done amazing things. We can design entire datacenters now to track the load on the servers, with the cooling systems, fans, chillers, EVERYTHING, ramping up and down with server load. Very cool stuff, and it really helps with facility efficiency. It also means higher power density for me to design around, but hey, there's always something :-) Overall, we can do more with less energy this way, which is a Good Thing for everyone. VMware is probably the biggest player in the field, but there are other ways to do it.

Bill

Thanks for all the advice, and the recommendation for the rack. I'll take a look at them for sure.

The server I built is designed specifically with virtualization in mind. Lots and lots of CPU cores, a modest 64GB of RAM (compared to the hosts I've built and operated in the past, which had TB of RAM), and four 8TB drives (the chassis has 15 3.5" bays, but I'll never use them all), which will be configured in a RAID 10 array so I still have performance, but my data is safe from drive failures. I still haven't settled on my baremetal hypervisor yet, but I'm between LXD and ESXi. The machine is basically an all-in-one, with compute power and storage all in one box, rather than needing a disk shelf or NAS. The power supply is a 500W 80-plus Platinum certified PSU, that's supposed to be about 92% efficiency at 50% load. Once I have it all setup, some time next week I should have a decent idea on idle and load power usage through iLO monitoring.
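For reference, RAID 10 capacity works out as half the raw total, since every drive is mirrored. A minimal sketch with a hypothetical helper name:

```python
# Usable capacity of a RAID 10 array built from identical drives.


def raid10_usable_tb(drive_count, drive_tb):
    """Usable TB for RAID 10: striped mirror pairs, so half of raw capacity."""
    if drive_count < 4 or drive_count % 2 != 0:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    return drive_count * drive_tb / 2


if __name__ == "__main__":
    print(f"{raid10_usable_tb(4, 8):.0f} TB usable from four 8 TB drives")
```

With four drives the array always survives one failure, and survives a second only if it lands in the other mirror pair.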

On top of running VM's, a good bit of what I do is containerized as well, using Docker and Kubernetes clusters, which converges more services into an even smaller footprint by sharing the host or "worker node" system kernel while allowing services to run fully independently of and isolated from each other. It's basically virtualization squared. Virtual machines running multiple services much in the same way that baremetal runs multiple virtual machines.

I used to be a sysadmin for multiple VMware ESXi and Citrix Xen environments. Now I work in the cloud, which is great, but much more expensive for the type of performance needed on some of these servers and services. Pricing from public cloud providers like AWS is designed for companies, not individuals. I do maintain a small environment in the cloud for testing, but the machines have about the same resources as a Raspberry Pi nano.

I was actually thinking about something similar to your PETG idea. I was thinking lift the rack off the floor to have an intake there, and use something like that to basically duct the intake air to the front using the space at the bottom of the rack and maybe the first 1-2u, so the cold air would be pulled in from the bottom and up the front, and the hot air would be exhausted out and up the back. I meant to start a drawing of it last night, but didn't get a moment of free time until almost 11pm. Hopefully I'll be able to find some time this weekend before it floats out of my head and into oblivion, as so many things do.

You won't get enough flow through the top and bottom of the rack, the path is too obstructed by equipment usually, and cables. You're much better off planning for "in the front, out the back" airflow with perforated front and rear doors. That's how all the datacenters do it these days. The "cold comes in the bottom of the rack" is a holdover from the old days when thermal densities were much lower. I know of only one facility in my area still doing that, and they also have grates in front of the racks now. Don't bother trying to lift the rack and get air in the bottom, it's not worth the effort, and won't work as well anyway.

Bill

Would it be possible to switch to a heat pump hot water heater for domestic hot water and duct the exhaust to feed into the equipment closet?

It's an idea I've been toying with myself when thinking about what to do with the cold air output that will come from an HPWH.

Your HPWH isn't going to produce enough cold air for this purpose. It wouldn't hurt anything to try this, it just won't be enough to provide 100% of the equipment's cooling needs. It's a clever idea to try to use some "waste cold air" though :-)

I often try to find ways to utilize the waste heat from the datacenters I work with. The problem is it's low-grade heat (heat that isn't very hot), so it's not very useful. My joke is that we need a commercial hotel towel drying company next door to blow our hot air to... About the best I've come up with is to use the waste heat to warm the parking lot so that we can avoid the need for plowing and salting in the winter. We just put in some poly pipe when the lot gets repaved, then circulate water from the hot side of the chiller through the pipes. It's easy enough to do, and it does something useful with waste heat that we'd otherwise be dumping into the cooling tower.

Bill

Interesting! (love the towel warmer idea btw)

I'm not by any means an expert on these things, but it's intriguing the inner geek in me and based on my rudimentary understanding of the science, isn't the entire point of a heat-pump to move heat-energy from "low-grade" sources of heat (like outdoor air in the winter which may only be 20-40 degrees) into more concentrated and useful forms of energy to heat air or water?

If, instead of the exhaust of a heat pump, you put the intake of a heat pump in the equipment closet (or datacenter), would that heat source potentially serve to more efficiently heat the building, or heat water for DHW or snow-melt purposes, or maybe to partially heat a pool or hot tub, etc.?

Yes, a heat pump moves heat. What happens is you use energy to "pump" heat from one place to another, usually against the natural temperature gradient (which means you go from cold to hot, instead of the natural direction of hot to cold). This is how air conditioners make your home colder in the summer than the outdoor temperature, and how a heat pump -- which is basically an air conditioner in reverse -- can scavenge heat from outdoors in the winter to make your house warm.

What happens in a datacenter is the "waste heat" is the heat made by the equipment. We use various types of cooling systems to move that heat away from the equipment so that we can maintain good operating temperatures for the equipment, which usually means around 70 °F or so. What we're left with after all of that processing is huge amounts of slightly warm air or water, either of which are "transfer fluids" here in thermodynamics-speak.

Physics says that the lower the temperature differential, the harder it is to extract any useful energy from something. This means that our huge amounts of slightly warm waste heat can't do much in the way of being useful, so it's usually just vented to the outdoors (cooling tower, etc.). It IS energy though, and HUGE amounts of energy, so I've always tried to find ways to do something, anything, useful with it. I've thought "heat the office space", but the cost to install the equipment to do that is usually more than the amount of energy savings we'd get, so it doesn't make sense. The underlying problem is that the transfer fluid is often both too warm to be useful for additional cooling and too cool to heat the offices directly to a comfy temperature. We could use that waste heat as the source for a heat pump, which would operate more efficiently because we'd reduce the temperature delta it has to work against, so it would take less energy to pump the number of BTUs we'd need. But we'd have to BUY the heat pump, then INSTALL the heat pump, and then pay to run it too. And we'd also have maintenance costs for that heat pump. When that all goes together, it ends up not making economic sense.
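To put rough numbers on the "temperature delta" point, here is a minimal Python sketch using the ideal (Carnot) heating COP, T_hot / (T_hot - T_cold) with temperatures in kelvin. The three temperatures are illustrative assumptions, not measurements from any real site, and real heat pumps only reach a fraction of the Carnot figure. The point is the trend: a warmer source means a smaller lift and a higher ideal COP.

```python
def f_to_kelvin(temp_f: float) -> float:
    """Convert degrees Fahrenheit to kelvin."""
    return (temp_f - 32.0) * 5.0 / 9.0 + 273.15


def carnot_heating_cop(source_f: float, sink_f: float) -> float:
    """Ideal (Carnot) heating COP for pumping heat from source_f up to sink_f.

    This is a theoretical upper bound, not what a real unit achieves.
    """
    t_source = f_to_kelvin(source_f)
    t_sink = f_to_kelvin(sink_f)
    if t_sink <= t_source:
        raise ValueError("sink must be warmer than source for a heating COP")
    return t_sink / (t_sink - t_source)


# Illustrative temperatures only (assumptions, not data from the thread).
SINK_F = 110.0        # temperature at which the heat pump delivers heat
OUTDOOR_F = 30.0      # cold winter air as the heat source
WASTE_HEAT_F = 85.0   # slightly warm datacenter exhaust as the heat source

for label, source_f in (("outdoor air", OUTDOOR_F), ("waste heat", WASTE_HEAT_F)):
    cop = carnot_heating_cop(source_f, SINK_F)
    print(f"{label} at {source_f:.0f} F: ideal heating COP = {cop:.1f}")
```

Even in this idealized picture the heat pump still has to be bought, installed, run, and maintained, so a better COP alone doesn't settle the economics.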

The one place we CAN make that work is with DX cooling systems, where we pipe the refrigerant loop into a coil in the office. This means the air conditioner in the data center is acting as a heat pump, pumping heat into the offices. With some three way valves, we can then modulate the flow of refrigerant and heat the offices in the winter, but pump the heat outdoors in the summer when we don't want extra heat in the offices. This works, because the phase change of the refrigerant releases heat in this case, and we can get enough hot-enough heat out that way. The problem is that we end up with pretty long refrigerant lines, which are expensive (these are usually 10 or 15 ton air conditioners, so the liquid side lines are usually 3/4" or so), which also means more $$ for refrigerant. We also have the additional complication of the maintenance contractors thinking "what IS this thing!?!", which usually means they charge more to work on it. More economic disincentive.
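For a sense of scale on the "10 or 15 ton" units mentioned above, here is a small conversion sketch. It relies only on the standard definition of a ton of refrigeration (12,000 BTU/hr) and the BTU/hr-to-watt conversion. The function names are made up for illustration.

```python
BTU_PER_HR_PER_TON = 12_000.0       # definition of one ton of refrigeration
WATTS_PER_BTU_PER_HR = 0.29307107   # 1 BTU/hr expressed in watts


def tons_to_btu_per_hr(tons: float) -> float:
    """Cooling capacity in BTU/hr for a given number of refrigeration tons."""
    return tons * BTU_PER_HR_PER_TON


def tons_to_kw(tons: float) -> float:
    """Cooling capacity in kilowatts for a given number of refrigeration tons."""
    return tons_to_btu_per_hr(tons) * WATTS_PER_BTU_PER_HR / 1000.0


for tons in (10.0, 15.0):
    print(f"{tons:.0f} tons = {tons_to_btu_per_hr(tons):,.0f} BTU/hr "
          f"= {tons_to_kw(tons):.1f} kW of heat moved")
```

That figure is the heat pulled out of the data center. The heat delivered at the condenser side is somewhat higher, because the compressor's own energy ends up there too.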

Thawing out parking lots is easy. We just have to be sure to NEVER stop moving the water through the lines. Cooling tower water doesn't have freeze inhibitors in it (they'd mess up the operation of the cooling tower), so if it stops moving, it can freeze. Since the datacenters have backup generators, this usually isn't a problem. We just put in a few redundant pipes and don't worry about it. The pipe loops in the parking lot can be tapped right off of the cooling tower supply and return lines too, and the transfer fluid is just water with some biocides in it so that we don't have issues with nasty things growing in the cooling tower. That's easier to implement, but it's only useful in the winter.

I'm always on a quest to improve the efficiency of these places, and I'm often contracted to do exactly that, which saves my customers money. Doing something useful with the waste heat is something I'd really like to be better able to do, but there just aren't many options, unfortunately.

Bill

Great explanation. Thank you for the super thorough and thoughtful response.

And your comments re: the extra cost and complexity of these types of solutions vs. the limited benefits really resonate.

Maxwell, an idea to rescue your general concept. After implementing whatever cooling approach works to keep the closet temperature from going too high, it will still be warm in there. If you then also duct the heat pump water heater's intake from there, and optionally its exhaust back to there, it will be working from higher temperature air than it would running in the basement. Thus, you will get more efficient water heating, even if you don't contribute that much to cooling the closet.

Do you put glycol in the water? It seems at current prices it's almost as much as the pipe. Or do you have some other way of keeping it from freezing?

You don’t generally run glycol in an evaporative cooling tower since glycol will mess up the functioning of the tower. Evaporative cooling towers work by evaporating water like an evaporative cooler to gain some energy efficiency. You trade water consumption for electrical power savings, basically.
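As a rough illustration of the water-for-electricity trade, the sketch below estimates how much water has to evaporate to reject a given heat load. It assumes all of the heat leaves by evaporation and uses an approximate latent heat of about 2,430 kJ/kg for water near 30 C. Real towers also lose water to drift and blowdown, so treat this as an order-of-magnitude figure only. The 35 kW load is an assumed example, roughly a 10 ton unit's cooling capacity.

```python
LATENT_HEAT_KJ_PER_KG = 2430.0   # approx. latent heat of vaporization near 30 C
KG_PER_US_GALLON = 3.785         # approx. mass of one US gallon of water


def evaporation_kg_per_hr(heat_rejected_kw: float) -> float:
    """Water evaporated per hour if all rejected heat leaves as latent heat."""
    kg_per_second = heat_rejected_kw / LATENT_HEAT_KJ_PER_KG  # kW is kJ/s
    return kg_per_second * 3600.0


heat_kw = 35.0  # assumed example load, not a figure from the thread
kg_hr = evaporation_kg_per_hr(heat_kw)
print(f"{heat_kw:.0f} kW rejected: about {kg_hr:.0f} kg/hr "
      f"({kg_hr / KG_PER_US_GALLON:.0f} US gal/hr) of water evaporated")
```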

Since the parking lot loop is tied in with the cooling tower on the “hot” side of the chiller, it doesn’t have glycol in it either. I suppose we could run a heat exchanger and then run glycol in an isolated loop, but that’s more equipment and I haven’t tried it that way. We don’t run glycol in the chiller water lines either since it reduces system efficiency and it isn’t needed — the chiller keeps the “cold” side at around 45*F, so no freeze protection is needed.

The parking lot loop just has to run the water all the time. The chiller puts heat into the loop, and that heat plus the moving water prevents freezing. If the circulation pump stopped, we’d lose the parking lot loop for the winter. The polyethylene pipe usually survives though, so when things thaw out we could restart the loop.

Glycol is used in closed systems with dry coolers (similar to a very big version of your car’s radiator) because those systems are exposed to freezing outdoors. In normal operation of the system, the system’s waste heat keeps things above freezing, but without glycol you risk freezing if the system shuts down in freezing weather.

Bill