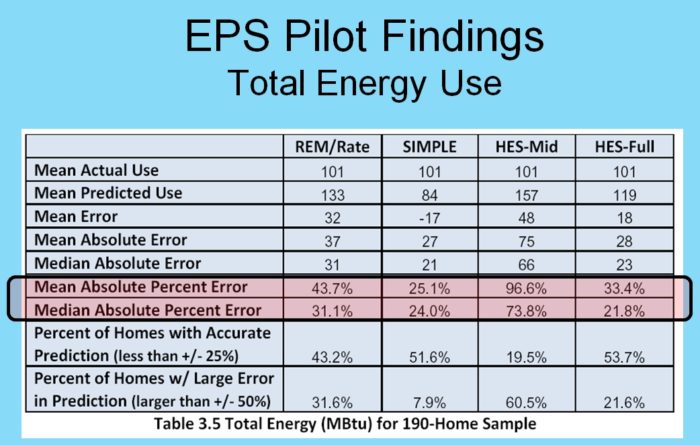

Image Credit: Table and graph from Michael Blasnik; window calculation formula from Bronwyn Barry

Energy consultants and auditors use energy modeling software for a variety of purposes, including rating the performance of an existing house, calculating the effect of energy retrofit measures, estimating the energy use of a new home, and determining the size of new heating and cooling equipment. According to most experts, the time and expense spent on energy modeling is an excellent investment, because it leads to better decisions than those made by contractors who use rules of thumb.

Yet Michael Blasnik, an energy consultant in Boston, has a surprisingly different take on energy modeling. According to Blasnik, most modeling programs aren’t very accurate, especially for older buildings. Unfortunately, existing models usually aren’t revised or improved, even when utility bills from existing houses reveal systematic errors in the models.

Most energy models require too many inputs, many of which don’t improve the accuracy of the model, and energy modeling often takes up time that would be better spent on more worthwhile activities. Blasnik presented data to support these conclusions on March 8, 2012, at the NESEA-sponsored Building Energy 12 conference in Boston.

Blasnik sees more data in a day than most raters do in a lifetime

Blasnik has worked as a consultant for utilities and energy-efficiency programs all over the country. “I bought one of the first blower doors on the market,” Blasnik said. “I’ve been trying to find out how to save energy in houses for about 30 years. I’ve spent a lot of time looking at energy bills, and comparing bills before and after retrofit work is done. I’ve looked at a lot of data. Retrofit programs are instructive, because they show how the models perform.”

According to Blasnik, most energy models do a poor job of predicting actual energy use, especially for older houses. And since…


78 Comments

30 years ago Bruce Brownell

30 years ago Bruce Brownell started designing and building the kind of homes that this article, Blasnik, and others finally say we all should be building now:

Airtight

Continuous insulation

Outsulate to keep the frame warm and minimize moisture issues

Fewer bumpouts

Insulate all 6 sides of a building continuously

Solar orientation

Use PV

Bruce Brownell Adirondack Alternative Energy

http://www.aaepassivesolar.com/

Congratulations Bruce for the success you have shared with us for so many years!

When will Energy Modeling be open source????

We need to get with the times and stop locking up useful tools! Michael, meet my challenge: build a GBA-sponsored spreadsheet energy modeling program that is FREE FOR ANYONE, or at least for PRO members.

The time is now for this site to have ENERGY MODELING.

Build and publish this GBA and you will see your readership DOUBLE!!!!!!!!!!!!!!!!!!

Dan.... are you paying attention my man.... Soup for you if you are!

I'm glad this topic was

I'm glad this topic was raised. I have always wondered if all the complexity of these super-programs was just an exercise in trivia; diddling w/ the small variables that really don't amount to much and can be hard, if not impossible, to model. Very interesting report.

What's the replacement?

I have done a lot of modeling over the years. My primary tool lately has been TREAT, although I use REM and EQUEST as well. Bottom line, I largely agree with Michael, modeling is not accurate enough to justify the time we spend with it and the decisions made from it.

Why is it so well accepted? Well, in the first place people like to make spending decisions based on facts, whether the facts are true or not, CYA. For efficiency programs modeling is often the basis of their justification to exist. And in either case if someone else's money is involved the need for due diligence must be met somehow.

The question remains, if we stop modeling (which wouldn't break my heart) how do we satisfy those needs? Do we need to do more accurate modeling or should we get people used to accepting a little uncertainty about ROI?

I don't think we will eliminate the demand for modeling for the CYA reasons mentioned above. It's too nice to have a number, any number, that someone spent a lot of time and energy to derive. I do think it would be great to break that dependency, I just don't think it will happen anytime soon.

Of course the other side of that is us, the modelers. It's a kind of satisfying geeky thing to do. And it gives us an answer, and we like that too. So there is a lot of work to do to break this co-dependency, thanks Michael (and Martin) for putting this out there.

And why are we doing all this in the first place?

I totally appreciate this article. As a person who works for a company which pays me to help homeowners (remodel/add on/simply improve) their existing homes, my employer and I are fully aware that improving the efficiency of a house is just one on a long list of things the average person might potentially want to do to their house, assuming they can afford to do anything. And as a green remodeler, energy efficiency must be weighed with equal respect to resource efficiency, longevity, and health.

I hate to say it, but efficiency improvement is on the "non-sexy" list almost all the time. Perhaps that's why window replacement companies have more success than the energy auditors and insulators. At least one can "touch and feel" their new windows (wink, wink), even if they may never recoup the cost of them.

Moreover, how many quadratic equations "should" it take to persuade a person that caulking that window may save them money in the long run? Sometimes the answer is in the form of a different question.

Matt

Alternatives to Modeling

We could always go back to this marketing pitch:

"_______ (fill in the blank) will save you (30%, 40%, 50%) on your energy bills."

Every prediction of energy savings is based on some kind of calculation. Modeling with an interactive UA calculation algorithm that considers interactive measures, and is based on tons of research, has broader credibility than some contractor's spreadsheet where we don't know what the calculations are based on.

Though it may not be spot-on, modeling allows many incentive programs a basis for providing much-needed funding at time of retrofit, rather than waiting a year for energy bills to prove the homeowner is eligible for the incentive. Incidentally Suzanne Shelton of The Shelton Group presented research at ACI National this week that 64% of homeowners who did 1-3 energy improvements ended up using more energy. Customers who received retrofits felt they did not have to be as careful with consumption afterward.

Inaccurate assumptions of the efficiency of existing features indicate there is insufficient guidance for software users in how to evaluate these things. Perhaps software providers, and the industry, could improve guidance on the evaluation of older systems.

Manual J is an energy model too, and though it also has its critics it seems to be generally accepted as the best method we have for sizing HVAC systems. I don't think we want to replace it with the method where you stand on the curb across the street and hold up the cardboard cutout to see what size unit the house needs.

modeling is just one of many factors...

Thank you for writing this article, Martin. Finally, the truth about energy modeling. Although I rely on modeling tools in my new construction work, especially for design loads, the results are only a starting point, one input among several factors that inform my design recommendations.

Only by understanding the limitations and weaknesses of a tool can we properly interpret the results. This type of judgment is not something that can be learned in class or a book, but rather through experience, curiosity, and feedback.

Efficiency programs that rely solely on modeling results are deluding themselves. For this reason, I did not support Home Star, and do not support the proposed 2012 HOMES Act.

I do take issue with your characterization of not needing to use test equipment when sealing ducts. While I agree in the case of duct leakage testing, it's important to test system airflow. Otherwise, a newly tightened duct system could cause airflow to drop below acceptable limits, even leading to coil freeze-up. Manclark was one of the first to write about this in the May 2001 edition of Home Energy Magazine. Fortunately, airflow testing doesn't take as long as prepping for a duct leakage test, but it does require significant training.

Response to Joe Blowe

Joe,

You reported that "64% of homeowners who did 1-3 energy improvements ended up using more energy." Now that's an interesting finding!

You wrote, "Inaccurate assumptions of the efficiency of existing features indicate there is insufficient guidance for software users in how to evaluate these things." Possibly -- but I tend to side with Blasnik in his conclusion that these types of systematic errors are due to bad software algorithms and bad default assumptions. If the software developers bothered to compare their models to the utility bills of occupied homes, then the software could be improved and corrected.

You wrote, "Manual J is an energy model too, and though it also has its critics it seems to be generally accepted as the best method we have for sizing HVAC systems. I don't think we want to replace it with the method where you stand on the curb across the street and hold up the cardboard cutout to see what size unit the house needs." Actually, Blasnik made a cogent argument to the effect that rules of thumb make more sense than Manual J calculations.

I hope that Michael will comment here on the Manual J question, but here are some notes I made of his opinions on Manual J. "It's bizarre how Manual J is treated as if it had been carved in a stone tablet, when actually it is a really crude and simplified model. It has all these fudge factors. And this precision doesn't really matter, because they don’t make heating and cooling equipment in all these increments. If you are sizing residential cooling equipment, there are only 5 possible answers. Bruce Manclark has worked on developing a simplified version of Manual J and Manual D, with fewer inputs. For 90% of most AC installations, sizing per square foot actually works pretty well. We just need better rules of thumb."
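Blasnik's point that calculated precision disappears once equipment is chosen can be illustrated with a short Python sketch. The list of nominal capacities below is an assumption (actual product lines vary by manufacturer), and the two loads are hypothetical values roughly 10% apart; both round up to the same unit.

```python
# Assumed nominal residential AC capacities, in tons (1 ton = 12,000 Btu/h).
# The exact list varies by manufacturer; this one is illustrative only.
NOMINAL_TONS = [1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 5.0]
BTU_PER_TON = 12_000


def pick_nominal_size(load_btu_per_hr, sizes=NOMINAL_TONS):
    """Return the smallest nominal size (tons) that covers the calculated load."""
    tons = load_btu_per_hr / BTU_PER_TON
    for size in sizes:
        if size >= tons:
            return size
    raise ValueError(f"load of {tons:.2f} tons exceeds the largest listed size")


# Two hypothetical Manual J results that differ by about 10%:
for load in (26_000, 29_000):
    print(f"{load:,} Btu/h -> {pick_nominal_size(load)} tons")
```

Because the answer is quantized, refining a load estimate only changes the outcome when it pushes the result across one of these size boundaries.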

Response to David Butler

David,

Your point about testing system airflow is an interesting one; like many writers on energy topics, I have been advising people of the importance of testing system airflow for many years. I'm interested in hearing Michael Blasnik's response to your concern that sealing duct leaks without testing system airflow might lead to problems.

Regarding the PHPP

While a simple energy efficiency retrofit may not merit a rigorous analysis – a gut renovation and new construction should.

Wolfgang Feist has recently tweeted, “results are in - consumption in Passive Houses really as low as predicted - to be presented at the conference http://j.mp/s31kZr”

I encourage everyone to attend the Passive House conference in Hanover Germany in May, and/or seek out the presentation online when made available.

An essential element of Passive House and the utilization of the PHPP is not to reduce energy use for heating and cooling for the sake of it, but as a serious attempt to help mitigate the worst effects of climate change. Given a retrofit or new building project, achieving anything less than what we know is readily achievable via the Passive House standard, should be seen as a lost opportunity in our climate mitigation efforts. The PHPP allows us to predictably make the most of our mitigation efforts.

So, if a fundamental goal in optimization is, as it is with Passive House, an approximate 90% reduction in heating and cooling loads, allowing for a proportional reduction in a heating/cooling plant, one needs to be confident in the numbers! At 4.75 kbtus/sf/yr (the PH standard), a 25% swing is only about 1.2 kbtus/sf/yr. A typical Energy Star home may have a heat demand of, say, 65 kbtus/sf/yr, no? That makes a 25% swing for the Energy Star home about 16 kbtus/sf/yr, or over three times the entire PH demand. So if one is looking for Passive House optimization, the PHPP is required. It is not a luxury.
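The arithmetic in the comment above can be reproduced in a few lines of Python. The 65 kbtus/sf/yr Energy Star figure is the commenter's "say" estimate, not a measured value; the sketch only confirms that the same 25% error is far larger in absolute terms for the higher-demand house.

```python
def modeling_swing(annual_demand, error_fraction):
    """Absolute size of a fractional modeling error, in the demand's own units."""
    return annual_demand * error_fraction


PH_LIMIT = 4.75      # kbtus/sf/yr, Passivhaus space-heating limit cited above
ENERGY_STAR = 65.0   # kbtus/sf/yr, the commenter's rough Energy Star figure
ERROR = 0.25         # the 25% swing under discussion

ph_swing = modeling_swing(PH_LIMIT, ERROR)
es_swing = modeling_swing(ENERGY_STAR, ERROR)
ratio = es_swing / PH_LIMIT

print(f"25% of Passivhaus demand:  {ph_swing:.2f} kbtus/sf/yr")
print(f"25% of Energy Star demand: {es_swing:.2f} kbtus/sf/yr")
print(f"Energy Star swing is {ratio:.1f}x the entire Passivhaus demand")
```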

Thermal bridging is the elephant in the room. HERS, Energy Star may address thermal bridging but unless the bridging losses are actually calculated and put into the model – the model is destined to be 20% off from the get go. The most pernicious thermal bridge, the installed window frame, is only seriously addressed by the Passive House program, which essentially requires the overinsulation of all window and door frames, and not coincidentally addresses a common trouble spot for condensation. Without serious attention to thermal bridging calculations, the models will never be great and can be most problematic for Passive House level construction.

It seems to me, the 4.75 kbtu/sf/yr threshold is too often a distraction. Yes, if one wants to identify a building as a CERTIFIED PASSIVE HOUSE, there are very strict definitional requirements. You hit it or you don’t, you are in or out, clean and clear.

But Certification should be seen as a key quality control and value added component. The PHPP is complex and the numbers matter. The Certification process allows for proper checking of component values and system integration - by the PHI accredited certifier and PHI itself. Practitioners learn a great deal in the Certification back-and-forth process, and so Certification is highly recommended, especially for a practitioner's first few projects.

We need to be clear as well that the 4.75 kbtu annual heat demand and 0.60 ACH50 airtightness are the MINIMUM requirements for Certification; the goals are really a third and half again better, respectively. If you can't hit the minimum requirements for Certification in a new building, PHI doesn't think you're really trying all that hard.

But if you don’t want the certification, and/or specific project constraints make hitting the strict goals unattainable, YET the project uses Passive House components and uses the PHPP and the methodology etc… one can legitimately call the project a Passive House Project – it is just not a certified project and PHI will not vouch for its performance as such. If instead of 4.75 kbtus/sf/yr your building is at 6 or 7 kbtus/sf/yr, you still likely have an awesomely efficient building - just not one that can be identified as a Certified Passive House.

Yes, plug loads are a problem, not just in energy use but in proper functioning of the systems, particularly at Passive House levels, and must be addressed. But they hardly seem an excuse to not make the basic building enclosure as good as the PHPP can help you make it.

Regarding the complexity and training required for the PHPP – for the uninitiated it is a nightmare to be sure. But with the investment in training and the first few buildings, one typically sees the hours required to complete the PHPP dramatically drop and become quite speedy and manageable. If one is interested in training in PHPP or the all important thermal bridge calculations I recommend you contact the Passive House Academy (www.passivehouseacademy.com) . I’ve taken their thermal bridging class and it is amazing – top notch instruction. And there are growing numbers of Certified Passive House Consultants (CPHCs), who are happy to help you with the calculations. Regional groups such as Passive House Maine, Passive House New England, Passive House New York, Passive House Northwest and Passive House California can put you in touch with them.

Response to Ken Levenson

Ken,

I'll do my best to respond to some of your comments, but because you raised so many points, I may be unable to respond to everything in depth.

Lowering a building's space heating needs to Passivhaus levels is a worthwhile goal, but I disagree with the assertion that it matters very much if you miss the target. As my recent article on occupant behavior shows, building a Passivhaus doesn't guarantee low energy bills. The occupants can still use too much energy, even in a Passivhaus.

You wrote, "HERS, Energy Star may address thermal bridging but unless the bridging losses are actually calculated and put into the model – the model is destined to be 20% off from the get go." So what? Much bigger swings than 20% of the heat requirement of a Passivhaus are possible due to thermostat settings, domestic hot water usage, or electronic appliance usage. Energy modeling precision is a myth.

I disagree with your assertion that "the Passive House program ... essentially requires the overinsulation of all window and door frames." I've reported on many Passivhaus buildings over the years, and visited several, and many of them have achieved the Passivhaus standard without overinsulating the window frames on the exterior. However, anyone who wants to save a few more BTUs a year is free to overinsulate their exterior window frames if they want -- it's a good technique for some window types, as long as the cost to execute this detail is proportional to the (tiny) savings.

Mitigating the effects of greenhouse gas emissions and addressing climate change is, indeed, a noble goal and a huge challenge. But building more new single-family homes in the U.S. (Passivhaus or not) is not the solution. The solution includes the elimination of coal-burning power plants, a radical rethinking of our transportation infrastructure, a series of cost-effective retrofit measures on existing buildings, and steep new taxes on fossil fuels.

There is no way we will build our way out of the current climate crisis with Passivhaus construction methods.

Response to Ken Levenson

While I think all of us agree that dramatic reductions in residential heating and cooling loads are both possible and important in the fight against global warming I'd suggest we be alert to the tunnel vision that can result from an entirely singular focus in that struggle. I beat the gong once again for the importance of opportunity cost within a more complex overall calculus: assuming a zero-sum total financial resource we might for example consider it more important to spend rather more on an in-town lot to increase walkability and reduce vehicle emissions and rather less on massive insulation, especially in more moderate climates. According to the perspective introduced here, "what we know is readily achievable" - in that more integrated view - may be better aimed at a level which does not require complex calculation.

This perspective also allows the very real advantage that many more designers, builders and, most important, homeowners may be brought into the fold. To pluck numbers out of the air in a vastly imprecise but possibly somewhat accurate estimate of the potential, 75% reduction in the energy use of 50% of the housing stock is going to do vastly more good than a 90% reduction for the favored few who can provide the resources to incur PH's substantial cost. For those of us who choose or are compelled to work in that less rarefied air simple but reliable rules of thumb are an essential part of the toolkit. Thanks Martin for this validation.
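The comparison in the comment above reduces to multiplying a per-home reduction by the share of the housing stock it reaches. A minimal Python sketch, assuming every home uses the same amount of energy; the 5% share for "the favored few" is a hypothetical placeholder, since the comment gives no figure.

```python
def stock_savings(per_home_reduction, share_of_stock):
    """Fraction of total housing-stock energy saved, assuming equal use per home."""
    return per_home_reduction * share_of_stock


broad = stock_savings(0.75, 0.50)          # 75% reduction in 50% of the stock
favored_few_share = 0.05                   # hypothetical; not from the comment
deep = stock_savings(0.90, favored_few_share)
breakeven_share = broad / 0.90             # stock share a 90% approach needs to match

print(f"Broad approach saves {broad:.1%} of stock energy")
print(f"Deep approach saves {deep:.1%} of stock energy")
print(f"Deep approach must reach {breakeven_share:.1%} of homes to match")
```

Whatever share is assumed, the deep approach has to reach more than 40% of the stock before it matches the broad one.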

response to Martin and James

Clearly agreed: building efficiency is but one tool in the box for mitigating climate change. An effort of World War II proportions is required, as demonstrated, among other places, by the climate wedges here:

http://thinkprogress.org/romm/2011/09/30/333435/socolow-wedges-clean-energy-deployment/

We can't use the excuse of other required efforts (such as more multi-family and better urban planning) to dismiss what is reasonably possible in building efficiency alone.

Passive House is approaching cost parity in Europe and with growing adoption in US there is no reason to think it can't here too.

I quite agree, and stated so, Martin: That hitting the exact number only matters if you want Certification. Ideally we should be trying to do better than the requirement but coming up short is not a mortal sin.

When the loads get tiny the thermal bridging matters a great deal.

I must admit that the general protestations against the rigors of Passive House, to me, echo those against the rigors of Japanese automobiles in the 1970s.....and we know how that turned out.

Wolfgang Feist seems to be disagreeing about the predictability of the PHPP. It will be interesting to see the presentation.

Enjoying the dialog.

Start with energy bills

In planning for an energy retrofit for our house in Minneapolis, I obtained energy bills for the previous 15 months from the utility provider. I used an Excel spreadsheet with 15 inputs and assigned square footages and U-values to the building elements of the house. The heat loss calculations were done manually using heating degree days, and the result was compared to normalized energy use from the utility billing data.

With this information, a retrofit priority list was made. Air sealing, attic insulation, foundation insulation, and a 95% furnace were the four improvements made. The envelope improvements were made first, and the old furnace was used for an additional heating season. I then sized the new furnace based on normalized energy use and an assumed efficiency of 65% for the old (1978) forced-air furnace.
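The comment doesn't show the spreadsheet, but the general technique of sizing a furnace from normalized billing data can be sketched in Python. The idea: multiply annual heating fuel by the old furnace's efficiency to get delivered heat, divide by heating degree days and by 24 hours to get a whole-house heat-loss coefficient (UA), then multiply by a design temperature difference. All inputs below except the 65% efficiency are hypothetical, and the sketch assumes heating use has already been separated from baseload such as water heating.

```python
THERM_BTU = 100_000  # 1 therm = 100,000 Btu


def btu_per_sf_hdd(annual_heating_btu, floor_area_sf, hdd):
    """Normalize annual heating energy by floor area and heating degree days."""
    return annual_heating_btu / (floor_area_sf * hdd)


def ua_from_bills(annual_heating_fuel_btu, hdd, furnace_efficiency):
    """Whole-house heat-loss coefficient (Btu/h per °F) implied by billing data.

    Heat delivered = fuel burned x furnace efficiency. Dividing by degree-days
    gives Btu per °F-day; dividing by 24 gives Btu/h per °F.
    """
    delivered_btu = annual_heating_fuel_btu * furnace_efficiency
    return delivered_btu / hdd / 24.0


def design_load(ua_btu_per_hr_f, design_delta_t_f):
    """Peak heating load (Btu/h) at the design indoor-outdoor temperature difference."""
    return ua_btu_per_hr_f * design_delta_t_f


# Hypothetical inputs for illustration only (not the commenter's data):
annual_fuel_btu = 1_000 * THERM_BTU   # 1,000 therms of heating gas per year
hdd = 7_500                           # assumed annual heating degree days
old_efficiency = 0.65                 # the comment's assumed efficiency for the 1978 furnace
delta_t = 82                          # assumed design temperature difference, °F

ua = ua_from_bills(annual_fuel_btu, hdd, old_efficiency)
print(f"UA: {ua:.0f} Btu/h per °F")
print(f"Design heat loss: {design_load(ua, delta_t):,.0f} Btu/h")
```

Regressing monthly use against degree days is one common way to do the weather normalization and baseload separation that this sketch takes as given.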

I modeled the post-retrofit house with both Rem Design and Energy 10 and found them to be in agreement as to the predicted energy use. The actual energy use was very close to the models, within 5%. Before the retrofit, the house used 4.06 Btu/sf/hdd; post-retrofit usage is as follows:

2007: 2.10 Btu/sf/hdd

2008: 2.27 Btu/sf/hdd

2009: 2.40 Btu/sf/hdd

2010: 2.38 Btu/sf/hdd

2011: 2.28 Btu/sf/hdd

We set back the thermostat twice daily in 2007, at night and during the weekday; this may account for the lower energy consumption that year.
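Using only the figures reported above, a short Python sketch computes each year's reduction from the pre-retrofit baseline; by this arithmetic, normalized use dropped by roughly 41% to 48%. The comment doesn't say whether the figures are fuel input or delivered heat, so the sketch can't separate the envelope's share of the savings from the furnace upgrade's.

```python
PRE_RETROFIT = 4.06  # Btu/sf/HDD before the retrofit, from the comment
POST_RETROFIT = {2007: 2.10, 2008: 2.27, 2009: 2.40, 2010: 2.38, 2011: 2.28}


def fractional_reduction(before, after):
    """Fractional drop in normalized use, e.g. 0.40 means 40% lower."""
    return 1.0 - after / before


for year, value in POST_RETROFIT.items():
    print(f"{year}: {value:.2f} Btu/sf/HDD, "
          f"{fractional_reduction(PRE_RETROFIT, value):.0%} below pre-retrofit")

# Average of the years without the twice-daily setback noted for 2007.
later = [v for y, v in POST_RETROFIT.items() if y != 2007]
print(f"2008-2011 average reduction: "
      f"{fractional_reduction(PRE_RETROFIT, sum(later) / len(later)):.0%}")
```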

I believe energy models can be used quite accurately on energy-efficient homes; this seemed to be true when modeling superinsulated homes in the 1980s. The energy modeling software was not very sophisticated back then; maybe that's why it worked.

Another response to Ken Levenson

Ken,

You wrote, "I must admit that the general protestations against the rigors of Passive House, to me, echo those against the rigors of Japanese automobiles in the 1970s."

I'm not protesting against the program's rigors; I'm just questioning whether the hours spent modeling are hours well spent. I'm also questioning whether the high cost of some new construction measures can be justified by the anticipated energy savings.

You wrote, "Wolfgang Feist seems to be disagreeing about the predictability of the PHPP."

I'm happy to stipulate that it's possible that energy monitoring data from 1,000 buildings in Europe may show that the average energy use data correlates well with PHPP projections. That doesn't change the fact that: (a) there will still be a bell curve distribution, with low-use households on one end and high-use households on the other, and that (b) domestic hot water use and plug loads are the most important factors explaining this bell curve.

Finally, I suspect that monitoring of Passivhaus buildings in the U.S. will likely reveal that, on average, U.S. families use more domestic hot water and have higher plug loads than the default PHPP values developed for German families.

Response to Ken

Actually, there are a couple of reasons why that's unlikely to happen here: the typically much greater size and complexity of US homes, for one, and the much lower price of energy, for another, are both barriers to widespread adoption. But I assume that your comment is focused on new construction, which, with the current huge inventory of unsold homes, is hardly the greatest of our problems right now, and there is no way 'cost parity' is a consideration with renovation of existing homes. Getting clients to spend 20K - 40K out of a 200K renovation budget on energy upgrades is a struggle, a 100K deep energy retrofit is next to impossible, and the jump beyond that to a PH-level upgrade is simply a bridge too far for all but the very rarest of energy-focused and deep-pocketed homeowners.

By the way, I've been telling my clients for two decades that energy prices are bound to rise dramatically sooner or later and they should regard ROI in that context. This is beginning to get old for all of us I think and my spiel has changed. Forget about ROI - the reason for including energy upgrades in your budget is simply because it's the right thing to do. What, after all, is the ROI on all the other discretionary costs in home construction, whether it's trendy recycled glass countertops or sustainably harvested cork and bamboo floors?

in response to James

I quite agree regarding ROI as far as the "selling" goes... I've asked clients: what was the ROI on your vacation to Mexico? Or how about the fancy restaurant dinner? Or your nice car? So I don't even bother selling Passive House based on ROI but based on comfort and health.....and yeah, by the way...it will pay for itself, and you'll be doing good for your kids and grandkids. It becomes rather silly not to do it, imho - because at that point all that is stopping them is the desire for a wood burning fireplace and high output gas stove.... And yes, I'm talking about first adopters with relatively more money to spend.....they are the first adopters after all. But with greater numbers, the costs will go down and the client base will broaden significantly. Habitat for Humanity is one of the biggest home builders in America and seems to be significantly moving in the Passive House direction.

Regarding renovation ....it assumes only that a renovation is going to be a gut renovation to begin with.

As for new construction, there will be something on the order of 700,000 new housing unit starts this year.....plenty of units to consider the implications of 25% better vs. 75% etc....and their use over the next 50 years.

in response to Martin

Martin,

Life is a bell curve - it is not a disqualifying characteristic. And the idea that the outliers in the PHPP bell curve are likely driven by plug loads and DHW only reinforces the validity of the PHPP to accurately predict the performance of the modeled enclosure. No?

And agreed, the plug load and DHW assumptions are off for US usage. Regarding both, there is no magic.....to get the most accurate results one should adjust values from default numbers to reflect likely usage. And for properly sizing various systems, it is important to do so.

Ken, wrong; life is not a bell curve

Much that is studied is actually NOT, on a bell curve...

Study a bit more thoroughly... Ken

aj builder.....you're kidding, right?

My comment regarding life as a bell curve was a gross over generalization...I thought, obviously enough.

Wikipedia on bell curves:

But I appreciate your concern for my mathematical education - I'll take it up with my statistician father-in-law. All best. ;)

Response to Ken

Ken,

I don't quite see how you can sell your clients on Passivhaus based on "comfort and health.....and yeah, by the way...it will pay for itself." I think that it's perfectly possible to build an extremely comfortable, healthy house without meeting the Passivhaus standard -- and I think that in many climates in the U.S., the level of insulation required to achieve the Passivhaus standard will never pay for itself.

I never said that "outliers in the PHPP bell curve are likely driven by plug loads." I'm saying the families in the dead center of the bell curve will have energy use profiles that are driven by plug loads.

If you agree that "the plug load and DHW assumptions are off for US usage," your statement implies that the oft-repeated claim that a Passivhaus will use only 10% of the energy of a "normal" house is untrue. Higher domestic hot water use and higher plug loads (typical of North American families) make that statement highly unlikely.

Ken, I agree with you that "life is a bell curve" -- I knew what you meant. But what we all need to think about (when we consider the two families I profiled in an earlier blog, the low-use family who lived in a net-zero house and the high-use family living in a Passivhaus -- you'll remember that the Passivhaus family used 7 times as much electricity as the net-zero family) is: Which levers will be most effective at moving the bell curve to the left?

in response to Martin

The 90% reduction claim applies only to heating and cooling annual demand - a fundamental distinction.

If the occupants "behave" one might expect an overall 75% energy usage drop.

Because as designers we can "control" the enclosure and basic systems, as opposed to the plug loads and how long a shower the occupants take, I'm inclined to apply the most rigor to the enclosure, and then with more efficient electronics and hot water heating systems, and more conscientious habits we can drive down the others.

(btw: i still "yell" at my wife to turn out the lights when she leaves a room......tough habits to break.)

Response to Ken

Ken,

You wrote, "The 90% reduction claim applies only to heating and cooling annual demand."

Oh, if only you were right! Sadly, you're wrong. Passivhaus advocates often repeat the falsehood that a Passivhaus building uses 90% less energy than a "normal" house.

I found these examples on the Web in no time at all:

Passive House DC: "Passive Houses save 90% of household energy."

(http://passivehouse.greenhaus.org/)

Passive House Alliance: "Passive House: no boiler, no furnace, highest comfort and up to 90% less energy."

(http://www.phmn.org/?page_id=2)

One Sky Homes: "Reduce energy consumption by 90% and enjoy amazing comfort with a Passive House."

(http://oneskyhomes.com/content/passive-house)

Passive House and Home: "A passive house is 90% more efficient than a standard house."

(http://www.passivehouseandhome.com/)

TE Studio: "Passive House aims to reduce energy in buildings by up to 90% while providing superior comfort and indoor environmental quality."

(http://testudio.com/services/passive-house/)

Clarum Homes: "A passive home is an extremely comfortable, healthy, economical, and sustainable home, designed and constructed to use up to 90% less energy than a traditional home."

(http://www.clarum.com/resources/passive/)

Sadly, such exaggerations are the rule, not the exception.

response to Martin

Yes, I completely agree that there is a communication problem. Every time I see such a claim - I want to insert (space heating and cooling) in front of "energy".

However, if you look at the standard, 4.75 kbtus/sf/yr space heating demand translates into roughly a 90% reduction from "typical heating demand" in heating climates. So I'm right so far as what the standard actually is supposed to achieve. And that's another statistical average. ;)
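The "roughly 90%" figure depends entirely on the baseline chosen. A small Python sketch shows the typical heating demand that a 90% reduction to 4.75 kbtus/sf/yr implies, and what the reduction would be against the rough 65 kbtus/sf/yr Energy Star estimate from an earlier comment. Both baselines are illustrative; neither is a measured figure.

```python
PH_LIMIT = 4.75          # kbtus/sf/yr, Passivhaus space-heating limit
CLAIMED_REDUCTION = 0.90


def implied_baseline(target, reduction):
    """Baseline demand for which `target` represents the given fractional reduction."""
    return target / (1.0 - reduction)


def reduction_vs(baseline, target):
    """Fractional reduction of `target` relative to `baseline`."""
    return 1.0 - target / baseline


print(f"Implied typical heating demand: "
      f"{implied_baseline(PH_LIMIT, CLAIMED_REDUCTION):.1f} kbtus/sf/yr")
print(f"Reduction vs. a 65 kbtus/sf/yr home: {reduction_vs(65.0, PH_LIMIT):.0%}")
```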

To get it straight from the Germans, see here:

http://www.passipedia.org/passipedia_en/basics/energy_efficiency_-_the_key_to_future_energy_supply

I should add that PHI has been inconsistent in their explicitness....as I see right above my quote link a reference only to energy and not heating....their inconsistency has led others to overstate it would seem.....

One other basis for the exaggeration

Ken,

The other way that this exaggeration overstates the case for Passivhaus is that it compares a Passivhaus building to "ordinary existing buildings in Germany" rather than code-minimum buildings. Obviously, Germany has a lot of very old buildings, many of which are hundreds of years old, including lots of buildings with uninsulated walls.

In the U.S., residential energy codes have been ramping up. A more realistic comparison for new buildings in the U.S. is to compare a Passivhaus building with a code-minimum U.S. building, not an "existing building in Germany."

regarding PH vs. code minimum

Fair. But the implication, as far as I've understood it, has only been relative to the existing building stock.

I'll find out info regarding PH vs. code minimum and report back.

On a side but related note: while ROI is always a factor and part of the equation for ensuring affordability, the goal of the claim, as I see it, is geared not toward the economics per se, but toward our efforts to mitigate global warming.

Couldn't agree more. New Idea?

This article may be just what I need to hear to change the way I do energy audits. I've been a remodeler my whole life, have been doing energy retrofits full time for 3 years now, and have been frustrated with energy models for 2 of those years. I figured out right away that they rarely agree with the utility bills, and I usually find myself figuring out the problems and talking to the customer too much, without enough time left to collect all the data. I'm all for measuring results, but modeling is wearing thin.

Also (because I'm not a computer programmer), I'd like to advocate for a new kind of software for existing homes (maybe it exists) that scraps the model and uses simple parameters like square footage, number of stories, and foundation type to predict savings based on the averages of actual measured savings from a given improvement on similar houses. I'm talking about a huge database that is continuously updated. I'd pay for a subscription. I can't be the first person to have thought of this, can I??? Some utility bill disaggregation may be necessary to pull out occupant behavior, but I can't think of anything simpler and more accurate for retrofits. Needless to say, I'm compiling my own.
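The lookup Andy describes can be sketched in a few lines. This is a minimal illustration that assumes a hypothetical record format (`RetrofitResult`) and hypothetical matching rules: same measure, same number of stories, same foundation type, and floor area within 25%. It uses the median rather than the average he mentions, a deliberate swap because a few outlier houses can drag an average a long way.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class RetrofitResult:
    """One record in a hypothetical database of measured retrofit savings."""
    measure: str                 # e.g. "attic insulation"
    floor_area_ft2: float
    stories: int
    foundation: str              # e.g. "crawlspace", "slab", "basement"
    measured_savings_kwh: float  # weather-normalized pre/post bill difference


def predict_savings(
    db: list[RetrofitResult],
    measure: str,
    floor_area_ft2: float,
    stories: int,
    foundation: str,
    area_tolerance: float = 0.25,
    min_matches: int = 3,
) -> Optional[float]:
    """Median measured savings for the same measure on similar houses.

    Returns None when too few comparable houses are in the database.
    """
    matches = [
        r.measured_savings_kwh
        for r in db
        if r.measure == measure
        and r.stories == stories
        and r.foundation == foundation
        and abs(r.floor_area_ft2 - floor_area_ft2) <= area_tolerance * floor_area_ft2
    ]
    if len(matches) < min_matches:
        return None
    return median(matches)


# Invented placeholder records, for illustration only.
db = [
    RetrofitResult("attic insulation", 1500, 1, "crawlspace", 900),
    RetrofitResult("attic insulation", 1700, 1, "crawlspace", 1300),
    RetrofitResult("attic insulation", 1550, 1, "crawlspace", 1100),
    RetrofitResult("attic insulation", 1600, 1, "slab", 5000),
]
estimate = predict_savings(db, "attic insulation", 1600, 1, "crawlspace")
```

The slab house is excluded by the foundation filter, so the estimate comes only from the three crawlspace homes. A real version would also need the bill disaggregation step he mentions before any record goes into the database.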

As for PHPP, I love the concept but have often mused about a Passivhaus founder having to come up with a budget retrofit strategy for an existing home in my area. With all due respect, I think you'd come back in an hour and find them under the table rocking back and forth muttering to themselves.

Andy, great post.Ken.... no

Andy, great post.

Ken.... no soup for you. You might want to relay this thread to your father-in-law so he might be able to explain how often life... is not... anything to do with... a bell curve. And nagging your wife...? OK... And not getting the gist of this blog? PH is a great concept... but... Martin is telling it like it is Ken.

No soup for me either... just to be fair... and please don't continue with me... thank you... have a nice day my friend.

Duct sealing and air flow.

I read some posts and had to comment on this, because I've had a problem with it. I sealed some ducts in a crawlspace and the furnace started tripping its high limit regularly. System airflow is important to the furnace operating properly and should be tested. Tell me if I'm wrong, but this should be as simple as an acceptable range of total external static pressure for a given model of furnace (note that I'm not talking about system balancing for comfort, just the furnace operating properly).
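Whether this really comes down to a single number is a fair question, but the check itself is easy to sketch. The version below compares measured total external static pressure (TESP) and temperature rise against a furnace's rated limits. The rated values in the example (0.50 in. w.c., a 35 to 65°F rise) are placeholders, not figures for any particular model; read the real ones from the nameplate or installation manual.

```python
def check_furnace_airflow(
    supply_static_in_wc: float,
    return_static_in_wc: float,
    rated_max_tesp_in_wc: float,
    supply_temp_f: float,
    return_temp_f: float,
    rise_min_f: float,
    rise_max_f: float,
) -> tuple[float, float, list[str]]:
    """Compare measured TESP and temperature rise against rated limits.

    Supply static is positive and return static is negative, so TESP is the
    sum of their magnitudes.
    """
    tesp = abs(supply_static_in_wc) + abs(return_static_in_wc)
    rise = supply_temp_f - return_temp_f
    problems = []
    if tesp > rated_max_tesp_in_wc:
        problems.append(f"TESP {tesp:.2f} in. w.c. exceeds rated {rated_max_tesp_in_wc:.2f}")
    if rise > rise_max_f:
        problems.append(f"temperature rise {rise:.0f} F above rated range (low airflow?)")
    elif rise < rise_min_f:
        problems.append(f"temperature rise {rise:.0f} F below rated range")
    return tesp, rise, problems


# Example readings after duct sealing; every number here is an assumption.
tesp, rise, problems = check_furnace_airflow(0.45, -0.30, 0.50, 140, 70, 35, 65)
for p in problems:
    print(p)
```

Checking the temperature rise as well as static pressure is a design choice: a high rise is the symptom most directly tied to high-limit trips, while TESP helps point to where the restriction is.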

comments, clarifications and replies

Sorry I'm late to the party here and this comment is so lengthy, but there was a lot to cover.

Martin, I think you did a very good job of trying to summarize the wide range of items I covered in the talk, but I'd still like to clarify a few things and reply to some questions:

1) I think current modeling tools work pretty well in modern and fairly efficient homes and can work pretty well in older homes -- if you make some tweaks to a few things like R values and infiltration and duct models. I wouldn't want anyone to think that I'm claiming that my simple model has some type of magic fairy dust driving it -- it's just a matter of adjusting some default values and assumptions and tweaking a few algorithms and focusing on getting the big things right.

2) I'm not against energy modeling -- I'm only against overly elaborate modeling efforts that involve spending a lot of time trying to model things that either don't matter or can't be modeled well. For energy retrofits, modeling tools should be quick and easy to use and only ask the user for input about things that matter. The model should be a tool to help the auditor -- not the other way around ;} For new construction or large or unusual projects, more complex modeling may be more justifiable.

3) Manual J is a fairly crude model and simpler methods can work as well. The relatively limited number of sizes for available heating and cooling equipment, the very small energy penalties from oversizing modern HVAC equipment, the desire for pick up capacity, and the advent of modulating equipment are all making the specific equipment size less important than ever. The biggest benefit of "right" sizing may be the increased likelihood that the ducts will be big enough. Manual D is more important than Manual J. None of this should be interpreted to mean that I think oversizing by 400% is OK.

4) Duct testing -- my comments were about duct blaster testing. It is the time and hassle of taping the registers and attaching the duct blaster that make it, in my opinion, not a cost-effective test -- especially given the effectiveness of pressure pans in finding leaks if you have a blower door with you to address shell leakage. Air flow reduction from duct sealing can be a problem, but you can track the impacts by measuring static pressures.

5) I've seen many people claim the supernatural performance of PHPP, but the data I've seen (and common sense) don't support many of these claims. Sure, houses with R-40 walls and R-60 attics and U-0.1 windows will use very little heating energy, and so absolute usage errors will tend to be small. But even for heating use, how much a given superinsulated home uses may be more about the type of TV they buy or whether they have a large dog than whether the slab has 4 inches or 6 inches of foam under it. In addition, if thermal flaws at window details really matter, it still doesn't make sense to me that every home would need a custom analysis -- can't you learn from prior homes? I really can't even follow the logic for most of Ken Levenson's arguments and agree with Martin on nearly every point. 90% savings? Certainly not compared to most new homes.

6) I also find the arguments about why ROI doesn't matter to be quite weak. The ROI for my vacation is more than 100% or I wouldn't have done it -- the enjoyment was worth the cost. What enjoyment do you get from thicker walls or lower-U-value windows? Comfort? You can get plenty comfortable without going to PH levels. Energy features with poor financial ROI likely have poor climate change ROI too -- especially when you consider opportunity cost. I'd rather see the resources that go into imported super windows and super HRVs and extra foam under slabs go into retrofitting some of the thousands (millions?) of uninsulated homes that many low- and moderate-income households live in across the US. If you really want to have a big impact on climate change, you could build a house to the BSC level (5/10/20/40/60), then take the extra money saved and donate it to retrofit a few low-income homes in the area.
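Item 1's point about adjusting R-values and other defaults is easiest to see in the simplest possible heating model. The sketch below is a textbook conduction-only degree-day calculation, not Blasnik's SIMPLE tool. It ignores infiltration, ducts, and internal and solar gains, and every area, R-value, degree-day total, and efficiency is an assumption chosen for illustration.

```python
def ua_value(components: list[tuple[float, float]]) -> float:
    """Envelope conductance UA in Btu/hr-F: sum of area (ft^2) / R-value."""
    return sum(area / r_value for area, r_value in components)


def annual_heating_therms(ua: float, hdd65: float, efficiency: float) -> float:
    """Conduction-only heating fuel estimate from heating degree-days (base 65 F)."""
    btu = ua * hdd65 * 24 / efficiency  # 24 hours per degree-day
    return btu / 100_000                # 1 therm = 100,000 Btu


# Hypothetical older house: (area ft^2, R-value) for walls, attic, windows.
# The wall R-value is an assumed default for an uninsulated wall.
house = [(1200, 4.0), (1000, 11.0), (200, 1.0)]
ua = ua_value(house)
therms = annual_heating_therms(ua, hdd65=6000, efficiency=0.80)

# Same house with a different assumed wall R-value, to show the sensitivity.
therms_alt = annual_heating_therms(ua_value([(1200, 6.0), (1000, 11.0), (200, 1.0)]), 6000, 0.80)
```

Changing only the assumed wall R-value from 4 to 6 drops the estimate by about 180 therms a year. That is why getting a few big default assumptions right matters more than collecting many small inputs.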

/off-soap-box

Response to Michael Blasnik

Michael,

Thanks for your detailed comments -- very helpful.

And it's perfectly OK to climb on a soap box now and then. I strongly agree with your point that "Energy features with poor financial return on investment likely have poor climate change return on investment too -- especially when you consider opportunity cost."

IMO: Energy Models Aren't Intended to Predict Usage for a House

Occupants are utterly unpredictable. Build quality is fairly unpredictable. So prediction has always been a loser's game. I think that's the main point here.

What, then, are models good for?

They can be useful for comparing a variable, one at a time.

Example: How much (if any) will I save per year using high-SHGC low-e windows vs. standard low-e windows?

OR, how much will I save per year with R-28 wall insulation vs. R-19?

But even then your answer is tempered by: "assuming this set of standard conditions".

So you build a few models, make a few changes, note the results, then stick your modeler back in the drawer. Drag it back out when the technology or costs change significantly.
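Kevin's one-variable comparison reduces to a short calculation once the "standard conditions" are fixed. In the sketch below, the wall area, heating degree-days, and furnace efficiency are assumptions. Nominal R-19 and R-28 stand in for whole-wall values, although framing lowers both in practice.

```python
def annual_savings_therms(area_ft2: float, r_old: float, r_new: float,
                          hdd65: float, efficiency: float) -> float:
    """Heating fuel saved per year by raising one assembly from r_old to r_new,
    with everything else held at the same assumed 'standard conditions'."""
    delta_ua = area_ft2 * (1 / r_old - 1 / r_new)    # Btu/hr-F
    return delta_ua * hdd65 * 24 / efficiency / 100_000  # therms


# Assumed: 1,500 ft^2 of wall, 6,000 HDD (base 65 F), 90% efficient furnace.
saved = annual_savings_therms(1500, 19, 28, 6000, 0.90)
print(f"R-19 -> R-28 saves about {saved:.0f} therms/yr")
```

Multiply by your own fuel price to get dollars. As Martin points out, the answer is only as good as the defaults fed into it.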

Response to Kevin Dickson

Kevin,

I understand your point, and it's valid, with just one quibble: Blasnik has found entirely different reasons to explain why some energy models give the wrong answers.

It's not because occupants are unpredictable. And it's not because build quality is unpredictable.

Blasnik has looked at the performance of energy models for thousands of houses, and the models are wrong in systematic ways. That's why he knows that these problems aren't due to the unpredictable behavior of occupants or variations in build quality.

The reasons for the systematic errors discovered by Blasnik are given in the article; they include bad default assumptions and bad algorithms. These are problems with the models themselves, not problems with the occupants or builders.

One of the problems cited by Blasnik -- a bad default R-value for single-pane windows -- can potentially throw off an energy model used for one of the purposes you suggest (determining savings attributable to glazing specifications).

Value of Energy Modeling

I think a good diagnostician is worth her/his weight in gold. Others may benefit from a lot of testing and modeling.

Disaggregating vs. modeling

I do light to moderate energy retrofits in north Florida. Generally the sole fuel is electricity but occasionally I run across some wayward soul with a center flue gas fired storage water heater fueled with $4 per gallon propane (gas is cheaper, right!?!?)

I don't use any energy modeling software, but I push hard to obtain at least 12 months of past energy bills. Then I work on disaggregating them.
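One common way to start disaggregating a year of bills, sketched below, treats the lowest-use months as mostly baseload and assigns everything above that level to HVAC. This is a generic technique and not necessarily this author's method. It assumes the mild spring and fall months carry little heating or cooling; any HVAC hiding in those months inflates the baseload, so the HVAC share comes out low. The monthly figures are invented.

```python
def disaggregate_baseload(monthly_kwh: list[float], n_lowest: int = 3) -> tuple[float, float]:
    """Split a year of bills into baseload and weather-driven (HVAC) use.

    Baseload is the average of the n lowest months (assumed to be mild
    shoulder months); usage above that level in each month is counted as HVAC.
    Returns (baseload kWh per month, HVAC share of annual use).
    """
    if len(monthly_kwh) != 12:
        raise ValueError("expected 12 monthly readings")
    base_month = sum(sorted(monthly_kwh)[:n_lowest]) / n_lowest
    total = sum(monthly_kwh)
    hvac = sum(max(m - base_month, 0.0) for m in monthly_kwh)
    return base_month, hvac / total


# Invented Jan-Dec bills for an all-electric house, in kWh.
bills = [900, 850, 950, 1100, 1500, 1900, 2100, 2100, 1800, 1200, 900, 1000]
base, hvac_share = disaggregate_baseload(bills)
```

In this example, HVAC comes out at about 35% of annual use.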

I organize my analysis around a home's six or more Energy Centers, to include:

1) Heating and cooling - identify an approximate proportion of total energy use attributable to HVAC, typically 30-40%. Assess present system, run a Manual J, room by room. Measure room supply airflows, compare to client complaints about hot and cold rooms - every home seems to have at least one problem room. Discuss system sizing and humidity control.

If the present system is aged and inefficient, calculate and advise whether a cost-feasible combination of building envelope upgrades (infiltration sealing and insulation) would allow for a smaller, high-efficiency, variable-capacity replacement system. Using Manual J and equivalent full load hours, conservatively calculate likely annual savings from system upgrade alternatives.

2) Water heating - default here is a storage electric water heater, generally in the garage. For homes with 3 or more full time residents, a heat pump water heater is generally a no-brainer. Homes housing 4-5 people may additionally or alternatively benefit from a refrigerant desuperheater recovering waste heat from the central air and parking it in a preheat tank plumbed upstream of any conventional water heater, including heat pump units.

If a client is in doubt or otherwise wants to refine water heating cost, it is a simple (less than 10 minutes / $50) matter to temporarily connect hourmeters to a storage electric water heater's elements... wait a week and crunch the results, adjusting as necessary for present vs. year-round average cold water inlet temperatures.

Homes with 6+ full-time residents MAY benefit from a solar thermal domestic water heating system, but high first cost and complicated installation hobble its effectiveness and ROI when all is said and done... the recent article "solar thermal is dead" loudly resonates with my experience and data.

3) Laundry - ask number of loads per week, note type of washer (front or top load), water temperatures selected. Determine if dryer has a moisture sensor, whether client selects automatic drying cycle. Also assess dryer exhaust path for airflow and lint blockage.

4) Media - question client as to number and types of computers and TVs, daily hours of operation. Connect a Kill-A-Watt to main media power strip to quantify load if circumstances indicate.

5) Kitchen - assess average cooking intensity, fuel and frequency. Examine range venting system for IAQ and humidity removal efficacy.

6) Lighting - Assess the percentage of fixtures using high-efficiency lighting (CFL or LED). Inform the client that incandescent fixtures are both an inefficient source of light and a significant addition to the cooling load. Ask the client to list lamps and average daily hours of use.

7) If a pool is present, determine filter and other pump horsepower and daily hours of operation. Measure motor amperage and calculate power consumption.

8) Assess other significant loads - out buildings, water features, hot tubs, irrigation pumps, etc.
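Several of the items above (equivalent full load hours, element hourmeters, pump amperage) come down to simple arithmetic. Here is a minimal sketch of those three calculations. Every input is an assumed example value, not data from the author's jobs, and holding EFLH constant while SEER changes is a simplification.

```python
DAYS_PER_YEAR = 365
WEEKS_PER_YEAR = 365 / 7


def cooling_kwh(capacity_btuh: float, eflh: float, seer: float) -> float:
    """Annual cooling kWh: Btu delivered over the year / SEER (Btu per Wh) / 1000."""
    return capacity_btuh * eflh / seer / 1000


def water_heater_kwh_per_year(element_kw: float, upper_hours: float, lower_hours: float,
                              setpoint_f: float, inlet_now_f: float, inlet_avg_f: float) -> float:
    """Scale one week of element hourmeter readings to a year.

    The temperature-lift factor is applied to all measured energy, including
    standby losses that don't really depend on inlet temperature, so this is
    an approximation.
    """
    weekly_kwh = element_kw * (upper_hours + lower_hours)
    lift_factor = (setpoint_f - inlet_avg_f) / (setpoint_f - inlet_now_f)
    return weekly_kwh * lift_factor * WEEKS_PER_YEAR


def pump_kwh_per_year(volts: float, amps: float, power_factor: float, hours_per_day: float) -> float:
    """Single-phase pump energy from measured amperage and an assumed power factor."""
    kw = volts * amps * power_factor / 1000
    return kw * hours_per_day * DAYS_PER_YEAR


# Every input below is an assumed example value, not a measurement.
centers = {
    "cooling (SEER 10)": cooling_kwh(36_000, 2_000, 10),
    "water heating": water_heater_kwh_per_year(4.5, 2, 9, 120, 80, 72),
    "pool pump": pump_kwh_per_year(230, 8, 0.9, 8),
}
upgrade_savings = cooling_kwh(36_000, 2_000, 10) - cooling_kwh(36_000, 2_000, 16)
for name, kwh in centers.items():
    print(f"{name:>20}: {kwh:7.0f} kWh/yr")
print(f"{'SEER 10 -> 16':>20}: {upgrade_savings:7.0f} kWh/yr saved")
```

Adding up measured or estimated energy centers like these, and checking the total against the bills, is the disaggregation approach this comment contrasts with modeling software.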

Armed with all that I can confidently suggest energy improvements and predict with reasonable accuracy their effect on energy conservation and operating cost.

If circumstances dictate, I temporarily deploy a multi-channel Energy Detective to gather and present consumption data for multiple significant loads in the home.

My point is that accurately disaggregating actual client utility costs trumps modeling software.

duct sealing and lower air flow

In general, duct sealing alone does not seem to decrease air handler airflow. Fixing disconnects, ducting building cavities, and fixing holes bigger than your head sure can. Measuring pre and post static pressures is a good idea, although I find a flow plate test faster and less variable. If I didn't own a flow plate, I would do pre and post statics.

Bruce

Homeowner perspective

I own a mini home (trailer), 16 ft by 70 ft, built in 1992. My concern is total energy cost per year. I had a blower door test done in 2007, and the air leakage at 50 pascals was 6.9 ACH. Without any detailed modeling, we started air sealing. Shotgunning the entire house, we reduced that to 3.1 ACH50. We installed a minisplit heat pump at a cost of $4,200 instead of the recommended window replacement, which would have cost $5,000. ROI was done on a calculator, and the savings were better with the heat pump. The house is all-electric, and my base load is 500 kWh per month. If I take the base load off my bill, I will have used about 3,000 kWh to heat my house this winter, at a cost of $295.50.

Going to attack the DHW next. We use an average of 7.3 kWh a day on hot water, but 1.7 kWh of that is heat loss from the tank. When the tank needs replacing, I will get a tank that loses 1.34 kWh a day and enclose it with a preheat tank. The preheat tank will never get up to the house's ambient temperature, so it will recover the loss from the electric water heater. That will reduce our DHW use to 5.6 kWh a day, saving $58 a year for an incremental cost of $500. If the enclosure is relatively airtight, it should reduce the sweating on the preheat tank.

I realise that the 1.7 kWh will have to be replaced by the heat pump at 0.567 kWh a day, but I will also gain that back in heat flow from the house to the DHW in the summer.
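For anyone checking Roger's numbers, they hang together under a few simple relationships. The sketch below uses only his stated figures. The one thing it adds is backing out the electricity price and heat-pump COP that those figures imply.

```python
DAYS = 365

current_dhw_kwh_day = 7.3
tank_loss_kwh_day = 1.7
after_dhw_kwh_day = current_dhw_kwh_day - tank_loss_kwh_day  # 5.6, as stated

saved_kwh_year = tank_loss_kwh_day * DAYS
stated_savings_dollars = 58.0
incremental_cost = 500.0
simple_payback_years = incremental_cost / stated_savings_dollars

# Electricity price implied by each of Roger's figures ($/kWh).
price_from_heating = 295.50 / 3000  # winter heating: $295.50 for about 3,000 kWh
price_from_dhw_claim = stated_savings_dollars / saved_kwh_year

# Making up 1.7 kWh/day of heat with 0.567 kWh/day of electricity implies this COP.
implied_cop = tank_loss_kwh_day / 0.567
```

The two implied prices (about $0.099/kWh from the heating bill and about $0.093/kWh from the $58 estimate) are close. Simple payback on the $500 comes to roughly 8.6 years, and the 0.567 kWh figure corresponds to a heat pump COP of about 3.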

Any suggestions?

not ready to bail out of the modeling business yet

Completely fascinating article. In the case of retrofits, it seems that the greatest errors derive from over-estimating pre-retrofit energy use. However, it is easy to compare the estimate with actual use.

In general, I'm reluctant to accept the conclusion that energy modeling is a waste of energy. Just because present systems may be inaccurate is not sufficient reason to neglect the concept; it should motivate us to improve our chops.

If that is a valid pursuit, then it addresses another of the author's issues: the variance of owner behavior. If we can develop and adopt a good modeling system, then it puts owner behavior into a rational context. A reliable system would quickly inform Harry and Harriet Homeowner what the impact of their four TV sets actually has on their utility bill.

Energy Efficient Homes v.s. Occupant Behavior

Thanks, Martin. (hi, Michael)

Great topic and good write up of it.

Having delved into this topic deeply and as the author of the Energy Trust of Oregon / Earth Advantage study you linked to in the article, I can say I was shocked by how poorly modeling software works. That said, I do think there is a time and a place for modeling. All things being equal, as in comparing different designs of the same (new) home, modeling can help one find the relative value of thicker insulation, versus better windows, versus a tighter envelope, etc. I still use PHPP, of all things!!

I wanted to point out one thing that seemed to get confused at times in the article and comment thread. If you are measuring the efficiency of a building, you quickly get lost in the woods if you add in occupant behavior. If you want to model occupant behavior (as I think Michael would agree), the best way is to look at their previous utility bills, not model the house. Yes, people will use more or less energy in a house, and the numbers will look very different. But keep clear about what you are measuring and why. If you want to compare the efficiency of one house to another, you had better normalize for occupant behavior. If you want to help people use less energy, talk with the people, teach them some new habits (good luck), and let them know about some more efficient options for the things they plug in. Pages 50-53 and 60 in the study mentioned go into this in more detail.

Starting on page 53, I talked about what we thought was reasonable and possible for the accuracy of a modeling software program. Michael’s SIMPLE was close, and we thought it could be developed to get there. It also strongly made the point you mention in the article, that more input doesn’t make it more accurate, so you might as well keep it simple and save everyone a lot of time and effort.

Thanks.

Response to Bruce Manclark

Bruce,

Thanks for your comment on duct sealing and airflow -- much appreciated. And thanks for all your good work in the Pacific Northwest.

Response to Roger Williams

Roger,

It sounds like you've been making good energy retrofit choices, using a step-by-step, logical approach.

Response to Alan Abrams

Alan,

I agree that energy modeling can be very useful, as long as we choose good modeling tools. Michael Blasnik has shown the potential of improved tools by developing his Simple spreadsheet.

One of the goals you mentioned -- "a reliable system [that] would quickly inform Harry and Harriet Homeowner what the impact of their four TV sets actually has on their utility bill" -- does not require energy modeling software. All it requires is a real-time electricity use meter with a living room display. These devices exist; for more information, see Home Dashboards Help to Reduce Energy Use.

Response to Eric Storm

Eric,

Thanks for your comments, and for your excellent report.

I completely agree with your important point: "If you are measuring the efficiency of a building, you quickly get lost in the woods if you add in occupant behavior. If you want to model occupant behavior (as I think Michael would agree), the best way is to look at their previous utility bills, not model the house."

That said, it's important to repeat that the modeling defects identified by Blasnik have nothing to do with occupant behavior.

Fascinating Article - Let's not throw the baby out with bathwater

Really interesting article. I'm actually a (relatively new) proponent of energy modeling - especially for gut reno and new buildings. It seems to me that the main issues with energy models (bad assumptions, bad algorithms) are fairly simply solved.

Make better assumptions. Use better models.

We aren't technologically frozen - energy models will improve (especially if we can be open source).

Look, nobody is arguing that there aren't regional builders out there with deep intuitive knowledge developed over years of experience. The simple fact is that not every designer has this ingrained knowledge and respect for the vernacular (pop into a design school critique, and vernacular design would be considered one step above the kid who draws like a two-year-old (sadly)), and not every designer practices in a single climate zone.

There is a recurring question of 'Is energy modeling cost effective?' In a vacuum, I don't know. But, as an architect, I don't see it as a singular service. I think of it as a design tool. Like sketching or 3D modeling, energy modeling allows iterative idea proposal, testing and refinement. It is integral to the design process. It's not for every job (neither is 3D rendering), but it's valuable for new buildings and gut renovations.

Arguably, REVIT (and CADD in the 80s) isn't exactly cost effective for all firms right now. It may not even be the most ideal tool for all projects. But architects and engineers will need to use it because it's a valuable design and drafting tool that will only improve. In ten years, if you aren't using REVIT, you're going to be at a competitive disadvantage. I think the same applies to energy modeling.

As to this backlash against PHPP and Passive House (which has been shown to be an effective modeling tool), I have to be honest: the arguments are just a little, well, curmudgeonly. The critiques are tangential: 'so-and-so website made an incorrect claim (in this day and age! the horror)', and 'wouldn't we be better off addressing climate change on a meta level'. Both of these may be true, but neither really addresses the fundamental value of Passive House: it is a methodology aimed at bringing a PARTICULAR SECTOR (housing) into line with regard to carbon emissions. Do we need to address energy on a holistic scale? Do we need to promote retrofit, especially in urban cores? Yes, yes and yes! But none of this is mutually exclusive with Passive House.

But, do you know what I think the real issue is? I think that builders and designers who have been doing GREAT work for years and years, don't like being told that, if you don't meet Passive House, you're not doing good enough. I don't have a response for that, but I suspect that, psychologically, this is an underlying issue.

Maybe we should hug it out.

Sorry for the long, rambling and slightly pugnacious post. I really love this site and love the discourse! I think we're all rowing in the same direction, we just have different types of oars!

Response to Grayson Jordan

Grayson,

I agree with most of your points. Your admonition -- "Let's not throw the baby out with bathwater" -- was similar to mine ("Don't throw your energy models out the window").

And I don't doubt that PHPP is an accurate modeling tool -- I just wonder whether the hours spent entering data are hours well spent.

Ready, Fire, Aim

There is an old expression: "Models model modelers," and it couldn't be truer in the case of building energy models. On the one hand, these models mirror their authors' view of the world; on the other, and often more importantly, they mirror their users' skill in assigning inputs and interpreting the outputs. The results of a four-year-old Energy Trust of Oregon analysis referenced in Martin Holladay's recent post are more a product of how the models were used than of what the models were capable of.

The Oregon study has been repeatedly invoked to make a series of points. But, citing a flawed study over and over doesn't make it true. In fact, such repetition does a distinct disservice to the building energy community--creating a mythology of misinformation.

Truly useful accuracy assessments couple a rigorous and transparent methodology with constructive forensics to help understand the sources of inaccuracies and provide fodder for improving the models. While deficient in these respects, the Oregon study also did not "accurately" characterize the building energy models. The authors chose not to heed review comments from developers of the REM/Rate tool pointing out specific deficiencies in the methodology and analysis. The final product was insufficiently documented to allow independent validation of the results. After publication, the Energy Trust of Oregon opted not to respond to requests for more transparent documentation to help identify the sources of asserted inaccuracies. What we can tell from the document is that the study hamstrung at least one of the tools--the Home Energy Saver (HES)--in multiple ways. Other problems with the experimental design are too numerous to go through here. We have now rerun HES against these same Oregon homes with greater quality control of the input data and full inclusion of known operational and behavioral factors. The median result agreed within 1% of the measured energy use, and with much lower variance between actual and predicted use than suggested in the Oregon study. For those who prefer not to consider occupant behavior ("asset analysis"), HES still predicts the central tendency of this set of homes much better than represented in the Oregon Study (but there is more spread in the results and many more outliers).

Unfortunately, the Oregon study (and more recent derivatives) has become the thing of urban legend: 'No reason to bother with detailed simulation. It is not accurate, nor worth the trouble.' However, in writing this response we wish to very strongly contest this conclusion and to let readers know that our recent research indicates the opposite is true. Not only do detailed simulations work well, they work better than simple calculations and provide greater predictive ability, especially when the more detailed operational level characteristics are considered.

Oddly, even taking the study at face value, perhaps its most important charts (not among those included in the GBA article), and other metrics found in the report show HES performing better than the other tools, including as defined by symmetry in the distribution of errors around the line of perfect agreement. This fact was de-emphasized in the study, and instead the readers' attention was directed primarily to average (rather than more appropriate median) results, coupled with a focus on "absolute" errors (obscuring problems of asymmetrical errors in some of the tools and inability to track realistic envelopes of energy use). All of this points readers toward a misinterpretation of the relative accuracy of the tools.
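The disagreement over averages versus medians, and over absolute versus signed errors, is easy to make concrete. The sketch below is not the Oregon study's method or the HES team's. It just computes several common summaries from the same invented predicted/actual pairs. A systematic bias shows up in the signed median and in the share of homes over-predicted, while mean absolute error reports how large the misses are but not which way they go.

```python
from statistics import mean, median


def error_summary(predicted: list[float], actual: list[float]) -> dict[str, float]:
    """Summarize model-vs-bill errors in several ways that can tell different stories."""
    pct = [(p - a) / a for p, a in zip(predicted, actual)]
    return {
        "mean_pct_error": mean(pct),                      # signed; pulled by outliers
        "median_pct_error": median(pct),                  # signed; robust central tendency
        "mean_abs_pct_error": mean(abs(e) for e in pct),  # size of misses, direction hidden
        "share_overpredicted": sum(e > 0 for e in pct) / len(pct),
    }


# Invented numbers for five hypothetical homes (annual use, any consistent unit).
summary = error_summary([120, 150, 90, 200, 110], [100, 100, 100, 100, 100])
```

Here one large miss pulls the mean error (34%) well above the median (20%), and four of five homes are over-predicted. Which of these numbers matters depends on whether the question is bias, spread, or both.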

The refrain about simpler models producing better results is a red herring. Indeed, as shown in the Oregon study, the more highly defaulted version of HES (dubbed "HES-Mid") had far less predictive power than the "HES-Full" version. Moreover, while HES offers many possible inputs (ways to tune the model inputs to actual conditions), many of them were skipped in the "HES Full" case, in lieu of set default values that were not representative of the individual subject homes. Additionally unclear to the reader, the Home Energy Saver does not in fact require any inputs other than ZIP code, thus leaving tradeoffs regarding time spent describing the house up to the professional using the model rather than to some remote third party. Of course, some inputs are more influential than others and analysts should focus on the ones that matter most for the job at hand.

That said, the amount of time that running a model "should" take (and the role of operational versus asset attributes) is a function of the purpose to which the results will be put and the definition and level of accuracy required. There is certainly no one-size-fits-all solution. This fact is rather glossed over in the article. User interface design also has much to do with the ease of model use and hence the cost. Moreover, thanks to long-term support from the U.S. Department of Energy, the Home Energy Saver is available at no cost to all users, which helps reduce the ultimate cost of delivering energy analysis services in the marketplace.

On the other hand, it is wishful thinking to suggest that simplified assumptions can capture the complex reality of estimating home energy and the potential for savings, and doing so results in a hazardous folk tale. Indeed, in a new peer-reviewed study of HES accuracy to be presented at the 2012 ACEEE Summer Study, we show that simulation is a very powerful means to improve predictions of how buildings use energy. Our analysis, however, heralds the uneasy conclusion that the importance of the household occupants and their habits is on a par with that of the building components and equipment.

Whether or not open source, it is important that these models not be "black boxes" and that the user community is free to discover what is happening under the hood. Extensive documentation of Home Energy Saver is open to public and peer review and suggestions on improving the methodology are always welcome. Public documentation of the other tools examined in the Oregon study is much more patchy.

All other things aside, many building energy models are in a state of constant improvement. Dredging up a dated analysis that was flawed in 2008 produces an even more flawed impression four years later, as these tools have evolved. Indeed, this may have been the most useful result of the Oregon study: to draw critical attention to model predictions in a number of areas, including infiltration, hot water estimation, HVAC representation and interactions, thermostat uniformity, etc. Suffice it to say that observations from the microscope are now reflected in more powerful and robust simulations four years later. And that was a positive impact. Moreover, no one in the simulation community is standing still; further improvements are being made as our attention is drawn to further phenomena. Did you suspect that interior walls might influence heat transfer substantially in poorly insulated homes? Or that basements are seldom conditioned to the same levels as the upstairs? We did. And addressing these shortfalls is bringing the space heating predictions of HES into ever-closer correspondence with actual consumption.

Beyond Oregon, we are looking at high-quality data sets that span the gamut of geographical variation and housing types: from cold Wisconsin to hot and humid Florida, often looking at fine-grain measurements of end-use loads from monitoring studies that allow further insight.

We fully agree that many nuances in building science may not be well reflected in a given model, and that bad inputs will yield bad outputs (garbage-in; garbage-out). These are areas of intense ongoing research and improvement in the Home Energy Saver tool at least. All would also no doubt agree that models are no panacea. The map is not the territory, but it can still help you get to where you want to go.

Simple models can provide rudimentary insight, and that should not be undervalued. However, the most detailed tools of today can help one to fathom the deeper influences that determine energy use in our homes. Yet, they teach this with a price for admission, suggesting that understanding comes not from simplicity, but rather from its opposite. If you are out to describe the truth, Einstein famously said, leave elegance to the tailor.

Evan Mills, Lawrence Berkeley National Laboratory

Danny Parker, Florida Solar Energy Center

Discussion continued here.

reply to Evan and Danny

Reading your post made me wonder if we've both been working in the energy efficiency field for as long as I know we all have. The Oregon study was not the first or only study to find major problems with energy use predictions from standard (complex) building energy models -- Martin even mentions the Wisconsin HERS study as well as results from retrofit savings studies that I brought up in my talk. If you talk with almost any thoughtful person who has run energy models on many older homes in the real world and then looked at the energy bills, you would have been hearing the same thing over and over for years -- you can't trust the model's energy use estimate or savings estimates. Heck, I've even run my own (100-year-old) home through REM and HES and both over-estimated my heating use by more than 40%.

You can't really blame these huge errors on occupant effects or auditor data-entry errors when you find large and systematic biases -- houses with low insulation levels and high air leakage rates use much less energy than "standard" models predict. There are reasonable explanations for why these errors exist, and fairly easy ways to reduce them.

The model estimates of heat loss through uninsulated walls and attics and from air leakage (to name a few) were very poor. If you use these tools (or at least the versions from a couple of years back) to estimate the savings from reducing air leakage by 1000 CFM50 or from insulating uninsulated walls, you get estimates far larger than anything the empirical data from impact evaluation studies show.
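For readers who haven't seen how an air-sealing estimate of this kind is typically produced, here is a rough sketch of one common back-of-the-envelope approach. It is not the algorithm inside REM or HES, which isn't described here. It assumes a blower-door N-factor to convert the CFM50 reduction to an average natural airflow, the standard sensible-heat factor of 1.08 Btu/hr·°F per CFM, a heating-degree-day total, and a furnace efficiency. The function names and example values are hypothetical.

```python
# Hypothetical sketch of a common rule-of-thumb estimate of heating savings
# from air sealing. The N-factor, degree days, and efficiency are assumptions.

# Sensible heat factor, Btu/(hr·°F·CFM):
# 0.075 lb/ft3 air density × 0.24 Btu/(lb·°F) × 60 min/hr
SENSIBLE_HEAT_FACTOR = 1.08
BTU_PER_THERM = 100_000


def natural_cfm(cfm50: float, n_factor: float) -> float:
    """Approximate average natural airflow from a blower-door CFM50 value."""
    return cfm50 / n_factor


def heating_savings_therms(cfm50_reduction: float, n_factor: float,
                           heating_degree_days: float,
                           heating_efficiency: float) -> float:
    """Annual fuel savings (therms) from cutting leakage by cfm50_reduction."""
    cfm = natural_cfm(cfm50_reduction, n_factor)
    # Avoided heat load: factor × CFM × °F-days × 24 hr/day
    btu_saved = SENSIBLE_HEAT_FACTOR * cfm * heating_degree_days * 24
    # Convert delivered heat to fuel input
    return btu_saved / (BTU_PER_THERM * heating_efficiency)


if __name__ == "__main__":
    # Assumed inputs: 1000 CFM50 reduction, N = 20, 6000 °F-days, 80% furnace
    print(round(heating_savings_therms(1000, 20, 6000, 0.80), 1))
```

With those assumed inputs the estimate comes to roughly 97 therms per year. The result scales directly with every assumption: choosing N = 10 instead of 20, for example, would double it.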

The Oregon study was expected to help quantify how much accuracy you lose when using a simplified model, so the finding of apparently better accuracy was a surprise to many. But the SIMPLE model was more accurate on these homes only because it didn't make the same huge errors as the other models. The fact that these errors persisted for so many years suggests either that no one was looking at this stuff, or that an unflagging, near-religious belief in the accuracy of models leads model makers to attack and dismiss any empirical data that contradicts their models.
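Once predictions and billing data sit side by side, it is easy to separate random scatter from systematic bias. The sketch below assumes you already have one predicted and one actual (weather-normalized) annual use figure per house. The mean percent error exposes a consistent over- or under-prediction, and the mean absolute percent error measures overall accuracy. The function names are illustrative, and the metrics the Oregon study itself reported may differ.

```python
# Hypothetical sketch: separating systematic bias from random scatter when
# comparing model predictions with actual (weather-normalized) usage.
from statistics import mean


def percent_errors(predicted, actual):
    """Per-house error as a percent of actual use (positive = over-prediction)."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    return [100.0 * (p - a) / a for p, a in zip(predicted, actual)]


def bias_and_scatter(predicted, actual):
    """Return (mean percent error, mean absolute percent error)."""
    errs = percent_errors(predicted, actual)
    # Mean error: signed errors can cancel, so a nonzero value signals bias.
    # Mean absolute error: errors cannot cancel, so it measures total inaccuracy.
    return mean(errs), mean(abs(e) for e in errs)
```

A model whose errors are large but cancel out will show a small bias and a large absolute error. A model that over-predicts most leaky, poorly insulated houses will show a large value for both.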

The lesson from the Oregon study is not that simpler models will always work better than complicated models -- obviously, if you have more detailed and accurate inputs, a model should be able to make better estimates of energy use, provided it uses good algorithms (still a big if). The lesson was that the current complicated models -- including REM and HES -- were remarkably inaccurate in modeling older homes, and that if you don't get the big things right, it doesn't really help to do a good job on the many small details -- in fact, they become a waste of time.

Speaking about getting the big things right, I'm glad to hear that you've used the results of the Oregon study to actually revisit some of the assumptions and methods in Home Energy Saver. But I'm a little confused about how such a deeply flawed study -- one in which you claim the models are actually unbiased when done properly -- could help identify model flaws. Regardless, it's good to get past stage 1 -- denial -- and move through the remaining stages towards acceptance as quickly as possible.

When used in the real world of fixing homes, energy modeling should be subjected to the same cost-effectiveness criteria as retrofit measures. It is up to the models out there to demonstrate that they provide useful information that is worth the time and effort to collect the data and run the software. I think that -- even when the models are improved and better reflect real-world energy use -- people will still find that elaborate modeling often isn't worth the effort. The best modeling software will only ask for things that are important and measurable, provide answers that are consistent with empirical data, and have a streamlined user interface that makes it a useful tool rather than an administrative burden. I think we may all agree on that.
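One way to frame that test borrows the savings-to-investment ratio (SIR) often applied to retrofit measures. Treat the cost of modeling as the investment, and treat the extra savings (or avoided overspending) that the modeling results make possible as the return. This sketch is hypothetical. In practice the hard part is estimating `extra_annual_savings`, which the code simply takes as an input.

```python
# Hypothetical sketch: apply a savings-to-investment ratio (SIR) test to the
# modeling itself, treating the modeling cost as the "investment".


def present_value_factor(discount_rate: float, years: int) -> float:
    """Uniform present-worth factor for a constant annual amount."""
    if discount_rate == 0:
        return float(years)
    return (1 - (1 + discount_rate) ** -years) / discount_rate


def modeling_sir(extra_annual_savings: float, modeling_cost: float,
                 discount_rate: float, years: int) -> float:
    """PV of the extra savings that modeling enables, divided by its cost.

    An SIR above 1.0 means the modeling paid for itself under these assumptions.
    """
    pv = extra_annual_savings * present_value_factor(discount_rate, years)
    return pv / modeling_cost
```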

Who's to blame when complicated models give the wrong answer?

Thanks, Michael, for your detailed response.

To Evan Mills and Danny Parker: thanks to both of you for your many years of research into the issues we're discussing here. I'm honored to have you comment on this blog, and am delighted that GBA can be a forum for this important debate.

I'd like to comment on one of your assertions: namely, that in order to obtain more accurate modeling results for the houses used in the Oregon study, you have "rerun HES against these same Oregon homes with greater quality control of the input data and full inclusion of known operational and behavioral factors."

In other words, if the answer is wrong, then clearly it's necessary to adjust the inputs.

I hear that the same approach is used with another well-known modeling program, WUFI. John Straube warns untrained users to be careful of WUFI, because any WUFI results need to pass the smell test. (In other words, if WUFI says that a certain type of wall will fail in a certain climate, and remodeling contractors working in that area know that walls of the same type are not failing in the field, then it is necessary to go back and tweak the WUFI inputs until the results pass the smell test.)

When I hear researchers tell me stories like this, I conclude that the model isn't very useful.

More on the subject...

Martin,

There was no "input massaging" in the re-analysis of the Oregon data. The only adjustments of which I am aware were that cases with wood heat and supplemental electric space heat were excluded, a reasonable precaution to help examine the accuracy of end-use prediction.

However, a great many adjustments have been made to the simulation algorithms over the last few years, many of them in response to closer scrutiny of model predictions against billing records. If one wishes to assign guilt to that process, then we (and the entire simulation community) are fully at fault. As mentioned in the blog entry, I credit the Oregon study with starting the ball rolling on that process. I just don't think that you should condemn simulation for limitations that may already have been addressed and that are, indeed, being continually improved.

I see such changes as reasons for optimism and encouragement. We are doing better than ever before at ferreting out the various factors that cause the models not to work so well. Right now, HES is predicting space heating quite accurately where we have data on space-heating end use.

Electricity is more problematic, perhaps because electricity use, itself, is a more stochastic process and less accessible to prediction.

Again, simulation models are powerful: HES, BEopt, or EnergyGauge can clearly show a user that energy-efficient lighting is critical in the South, where lighting savings are augmented by additional cooling savings. On the other hand, wall insulation may be a better early retrofit option in Minnesota, where part of the lighting savings is lost to increased heating.
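The lighting example can be made concrete with a back-of-the-envelope calculation. This is a minimal sketch. It assumes all-electric HVAC and assumes that every kWh of lighting saved removes one kWh of internal heat. The fractions of that heat falling in the cooling or heating season, and the equipment efficiencies, are placeholder inputs that a real model (hourly or otherwise) would work out from climate and schedules.

```python
# Hypothetical sketch of the lighting/HVAC interactive effect, assuming
# all-electric HVAC and that each kWh of lighting saved removes one kWh of
# internal heat. Season fractions and efficiencies are placeholder inputs.


def lighting_interaction(lighting_kwh_saved: float,
                         cooling_fraction: float, cooling_cop: float,
                         heating_fraction: float, heating_cop: float):
    """Return (cooling_bonus_kwh, heating_penalty_kwh, net_kwh_saved).

    cooling_fraction: share of the removed heat the AC no longer has to remove
    heating_fraction: share of the removed heat the heating system must now supply
    heating_cop: 1.0 for electric resistance, higher for a heat pump
    """
    cooling_bonus = lighting_kwh_saved * cooling_fraction / cooling_cop
    heating_penalty = lighting_kwh_saved * heating_fraction / heating_cop
    net = lighting_kwh_saved + cooling_bonus - heating_penalty
    return cooling_bonus, heating_penalty, net


if __name__ == "__main__":
    # Illustrative inputs only: a cooling-dominated vs. a heating-dominated house
    print(lighting_interaction(600, 0.5, 3.0, 0.1, 2.5))
    print(lighting_interaction(600, 0.1, 3.0, 0.5, 1.0))
```

With gas heating, the heating penalty would be fuel rather than electricity and should be tallied separately instead of being subtracted from the kWh savings.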

Of course if users want to plan zero energy homes using rules of thumb and personal divining rods, then be my guest.

I'll put my faith in physics and detailed modeling.

Danny Parker

Florida Solar Energy Center

Response to Danny Parker

Danny,

Thanks for your further comments. I'd like to emphasize what I see as our points of agreement:

● The Oregon study revealed defects in some of the algorithms in HES and REM/Rate.

● Due in part to the Oregon findings, the HES algorithms have been improved.

● While energy modeling may not be appropriate for every energy retrofit project, it is useful for designers of custom zero-energy homes.

How detailed is detailed enough?

Danny-

It may surprise you, but the SIMPLE spreadsheet model does include the interactive effects between lighting and heating and cooling loads. It also includes the heating/cooling interactions for hot water, plug loads, etc. -- and also the interactions between cool roofs / radiant barriers and duct system efficiencies and attic heat gains/losses.

I guess you could say that SIMPLE is only simple when it comes to reducing the number of required inputs and avoiding hourly simulations. But it is actually fairly complex under the hood. I tried to include significant interactive effects throughout. It's not at all clear that you need to use an hourly simulation or collect a lot of extra data elements to capture these effects reasonably well.
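The variable-base degree-day method is one well-known way to capture internal-gain interactions without an hourly simulation. Internal gains (lights, appliances, people) lower the balance-point temperature, so fewer degree days need heating. This sketches that general technique and does not describe how SIMPLE itself is built. The function names, the single combined UA (conduction plus infiltration), and the omission of solar gains are all simplifying assumptions.

```python
# Hypothetical sketch of the variable-base degree-day technique: internal
# gains shift the heating balance point, so no hourly simulation is needed.
# Not SIMPLE's actual code; solar gains are ignored for brevity.


def balance_point_f(thermostat_f, internal_gains_btuh, ua_btuh_per_f):
    """Outdoor temperature at which internal gains alone offset heat loss."""
    return thermostat_f - internal_gains_btuh / ua_btuh_per_f


def heating_degree_days(daily_mean_temps_f, base_f):
    """Sum of (base - daily mean) over days colder than the base."""
    return sum(max(0.0, base_f - t) for t in daily_mean_temps_f)


def annual_heating_btu(daily_mean_temps_f, thermostat_f, internal_gains_btuh,
                       ua_btuh_per_f, heating_efficiency):
    """Fuel input for heating, using a combined UA (conduction + infiltration)."""
    base = balance_point_f(thermostat_f, internal_gains_btuh, ua_btuh_per_f)
    hdd = heating_degree_days(daily_mean_temps_f, base)
    return ua_btuh_per_f * hdd * 24 / heating_efficiency
```

Cutting internal gains, for example with efficient lighting, raises the balance point and adds degree days. That increases the heating estimate, which is the interaction discussed above.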

Modeling use cases

So why do we currently model, and do the modeling tools actually support those use cases? Simply throwing rocks at modeling in general does the industry a disservice when there are actually strong use cases for modeling. That is not what Michael is doing, but it is easy for modeling critics to make it seem that way.

So what are the basic use cases for modeling?

We model to design efficient new homes, where potentially every feature could be changed to improve energy use and there is no historical usage for comparison. It seems like detailed modeling might be useful here, and it sounds like the models are actually OK at this. You can get picky about which model to use and how well each model supports calculating really low energy use, but that is a matter of personal taste and, to some extent, the style of building. I understand that testing is underway to compare REM to the Passive House model to allow REM to be used in that program.

We model to support decisions about retrofit options. We have a billing history to help us improve our pre-retrofit model (if we use the billing data to calibrate the model and find data-entry errors), and we learn a lot about what saves energy and what does not. But after doing a lot of models, do I really need to keep modeling? What more am I learning? Not much. But in my experience, building performance contractors who have not modeled a good number of homes (50-100?) are not as strong at understanding where to save energy cost-effectively, and they tend to overdesign energy-saving solutions for customers, spending more of the customer’s money unnecessarily. So modeling to learn is good. But modeling once we have learned may not be as valuable.

We model to get incentives. Programs that want to encourage deep retrofits like to use modeling to control access to dollars. That way you get to design the best solution for a home. Deemed-savings programs do not fit well with home performance approaches, because they tend to have you putting things into homes that do not need them just to get incentives. Other funding sources, such as Congress, like performance-based incentives because everyone wins (most everyone, anyway). Legislators don’t have to be lobbied by different industries to get each industry’s energy-saving solution included in the law. At a different session at ACI, Jake Oster of Congressman Welch’s office stated this explicitly. We currently have two national incentive programs, introduced with bipartisan support, that depend on modeling. Boy, wouldn’t that make an impact if these passed! And since they create jobs and have bipartisan support, they have a not-so-terrible chance of passing.

Is the modeling process for getting incentives robust enough to trust with determining incentive values? The proposed laws reference several requirements that were designed to make the process robust and enforceable through quality assurance. First, they require that the software pass the RESNET Audit Software standard, a set of physics tests that stretch the tools. Stretching is a good test of relative model robustness, but this type of testing is different from accuracy tests, which measure both user error (often complexity-driven) and the performance of the physics at the mean. These tests can and will be improved, but right now they are the best available performance-based accuracy test (meaning developers can run the test themselves, which helps them develop faster and less expensively) that can be referenced as a standard in legislation.

Second, we need to establish a baseline for the energy usage in the building. This is what the incentive dollar amount will depend on. But guess what: we have a simple method for getting a baseline. It is the existing energy bills. If we start from the weather-normalized energy use and subtract the energy use of the building after it has gotten a whole-house improvement, we ought to be fairly accurate. The real bills are the real bills, after all, and we have data showing that improved houses model better than houses with all sorts of problems. It is the problems that are the problem. They are hard to model correctly. Lots of inputs are needed, and many of those inputs are hard to measure, so we estimate them (and tend to estimate high). All of these assumptions about problems, combined with any errors in the modeling software for the actual conditions, make it tough to model poorly performing homes. But for an incentive calculation we can actually ignore these issues. We can make the baseline the weather-normalized actual bills.
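Weather-normalizing the bills is itself a small model. The sketch below shows the usual idea behind PRISM-style methods. It regresses monthly use against heating degree days to separate a baseload from a weather-sensitive slope, then re-evaluates the fit at long-term normal degree days. This simplified version assumes a fixed degree-day base and equal-length billing periods, whereas real methods usually work per day and search for the best base temperature. The function names are hypothetical.

```python
# Hypothetical sketch of weather normalization: ordinary least squares of
# monthly use on monthly heating degree days (fixed base), then re-evaluation
# at long-term normal weather. Assumes equal-length billing periods.


def fit_use_vs_hdd(monthly_use, monthly_hdd):
    """Return (baseload per month, use per HDD) from a least-squares line."""
    n = len(monthly_use)
    if n != len(monthly_hdd) or n < 2:
        raise ValueError("need matching lists with at least two months")
    mean_x = sum(monthly_hdd) / n
    mean_y = sum(monthly_use) / n
    sxx = sum((x - mean_x) ** 2 for x in monthly_hdd)
    if sxx == 0:
        raise ValueError("degree days must vary across months")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(monthly_hdd, monthly_use))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def normalized_annual_use(monthly_use, monthly_hdd, normal_annual_hdd):
    """Annual use the house would show in a year of normal weather."""
    base, slope = fit_use_vs_hdd(monthly_use, monthly_hdd)
    return 12 * base + slope * normal_annual_hdd
```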

But there is an interesting thing: an energy model calibrated to the actual weather-normalized bills is functionally the same as the weather-normalized bills. And if we calibrate the model, we get the extra benefit of eliminating a lot of the gross user error that otherwise creeps into the models. So we solve two things at once: we get an accurate baseline, and we improve model quality.
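Calibration can be pictured as tuning an input that auditors usually estimate rather than measure, until the model reproduces the weather-normalized bills. The sketch below uses bisection on a single hypothetical knob, an overall UA value, in a toy degree-day model. It illustrates the idea only. It is not the procedure in the ANSI calibration standard developed by BPI and RESNET, and it does not attempt to reproduce that standard.

```python
# Hypothetical sketch: calibrate one uncertain model input so the model's
# annual estimate matches weather-normalized bills. Illustration only; this
# is not the BPI/RESNET ANSI calibration procedure.
from typing import Callable


def calibrate(model: Callable[[float], float], target: float,
              low: float, high: float, tol: float = 1e-6,
              max_iter: int = 200) -> float:
    """Find x in [low, high] with model(x) close to target, by bisection.

    Assumes model is continuous and increasing over [low, high].
    """
    if model(low) > target or model(high) < target:
        raise ValueError("target is outside the model's range on [low, high]")
    for _ in range(max_iter):
        mid = (low + high) / 2
        if model(mid) < target:
            low = mid
        else:
            high = mid
        if high - low < tol:
            break
    return (low + high) / 2


if __name__ == "__main__":
    # Toy model: annual therms from UA, assuming 6000 °F-days, 80% efficiency
    def toy_model(ua):
        return ua * 6000 * 24 / (100_000 * 0.80)

    # Target: 800 therms/yr of weather-normalized heating use (made up)
    print(calibrate(toy_model, 800, 100, 2000))
```

If the calibrated value lands far from what the audit found, that is a flag to recheck the inputs. This is one way calibration catches gross data-entry errors.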

BPI has worked with RESNET and a group of industry contributors to create an ANSI standard for this calibration process. This standard is what is referenced in the legislation. The joint BPI and RESNET effort here was considerable and very important. Congressional staff did not believe we could make this joint effort work, but we did, and congratulations are due to the participants. The joint effort also means that the bill is more likely to pass. The President has endorsed these efforts in his budget, getting the attention of DOE in the process.

Other programs besides Congress use performance-based incentives. These programs would also benefit from setting baselines using model calibration. Efforts like Green Button and utility connections to EPA Portfolio Manager also improve access to the energy information needed to do the calibrations. Other efforts, like HPXML, will make it possible to choose among modeling tools, to collect data outside of modeling tools, and to import that data into modeling tools for incentive access. A lot of infrastructure is growing that will make performance-based incentives more cost-effective to perform and administer.